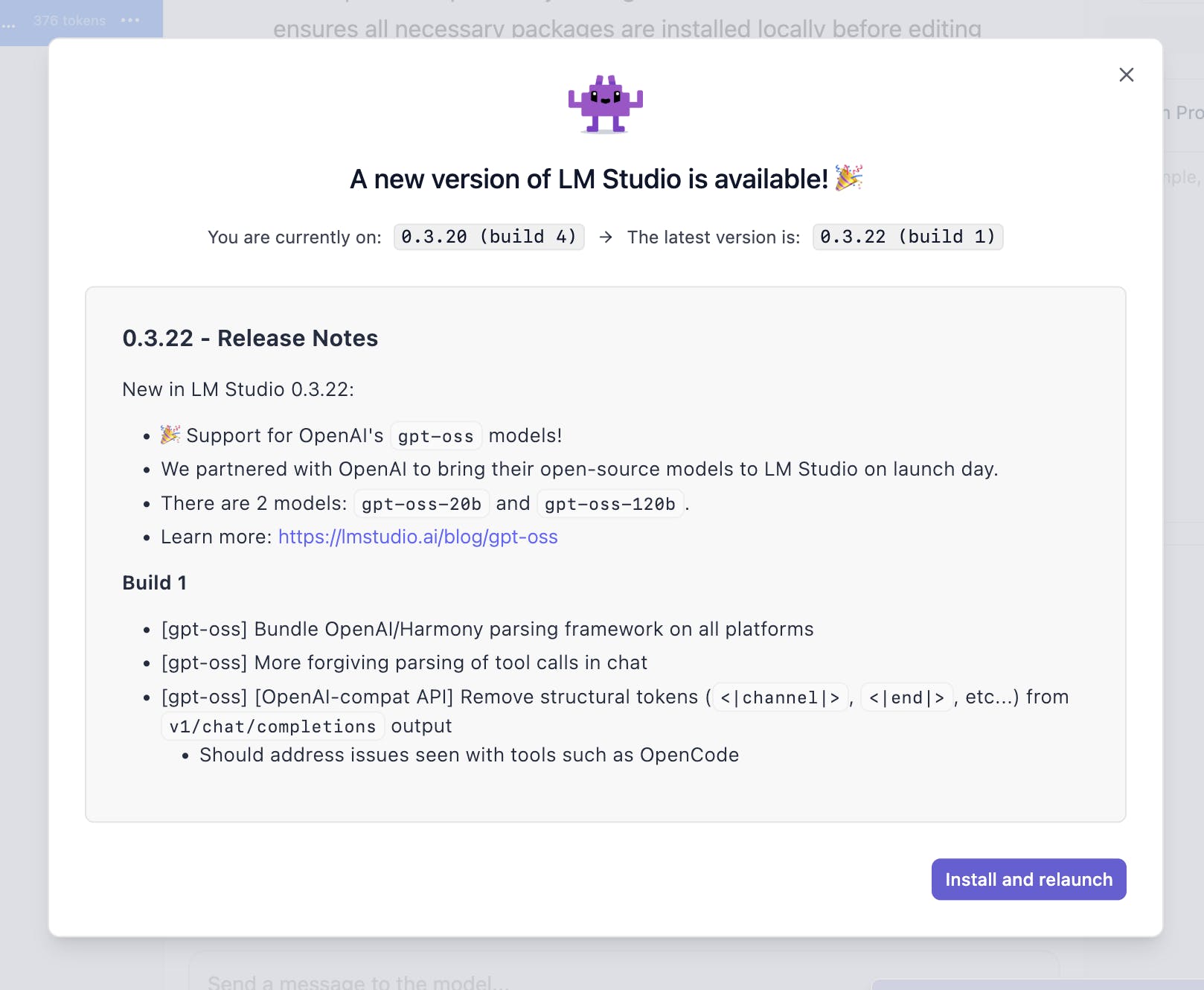

Run OpenAI's gpt-oss locally in LM Studio

Want to run @OpenAI's open models locally? Now you can with LM Studio!

The model comes in two sizes: 20B and 120B.

Around 13GB of RAM is needed for the smaller model.

gpt-oss-20b: for lower latency and local or specialized use cases (21B parameters with 3.6B active parameters)

gpt-oss-120b: for production, general-purpose, high-reasoning use cases; fits on a single H100 GPU (117B parameters with 5.1B active parameters)
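Where does a figure like ~13GB come from? Here's a rough back-of-the-envelope sketch. It makes a simplifying assumption: every weight is stored in MXFP4. That means 4-bit values plus one shared 8-bit scale per block of 32, or about 4.25 bits per parameter. In reality only part of the weights use MXFP4, and the KV cache and runtime add more on top, so treat these as ballpark numbers.

```python
# Rough, weights-only memory estimate for the two gpt-oss sizes.
# ASSUMPTION: every parameter is stored at ~4.25 bits (MXFP4: 4-bit values
# plus one 8-bit shared scale per 32-value block). Real checkpoints keep some
# weights at higher precision, and KV cache/runtime overhead is not included.

BITS_PER_PARAM = 4 + 8 / 32  # 4.25 bits per parameter under the MXFP4 assumption

# Total parameter counts as stated in the post.
MODELS = {
    "gpt-oss-20b": 21e9,
    "gpt-oss-120b": 117e9,
}


def weight_gb(n_params: float, bits_per_param: float = BITS_PER_PARAM) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for name, n in MODELS.items():
        print(f"{name}: ~{weight_gb(n):.1f} GB of weights")
```

Under this assumption that works out to about 11GB of weights for gpt-oss-20b and about 62GB for gpt-oss-120b. That lines up with the ~13GB figure once overhead is added, and with the larger model fitting in an 80GB H100.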


Replies

Dereference

This is huge. Running serious models locally without relying on cloud APIs opens up a ton of possibilities for speed, privacy, and control.

What kind of workflows are you most excited to unlock with this setup?

I like how @Ollama also shows these new models by default in the new update.

"Around ~13GB of RAM is needed for the smaller model." Is that system RAM or GPU memory?