Launched on HackerNews: What Happened and What I Learned

Why LangFast exists

I shipped LangFast out of frustration with the existing tools.

Prompt testing and LLM evaluations should be simple and straightforward. All I wanted was a simple way to test prompts with variables and Jinja2 templates across different models, ideally something I could open during a call, run a few tests in, and share the results with my team.

But every tool I tried hit me with a clunky UI, required login and API keys, or forced a lengthy setup process.

And that's not all. Then came the pricing.

The last quote I got for one of the tools on the market was $6,000/year for a team of 16 people on a use-it-or-lose-it basis. For a tool we use maybe 2–3 times per sprint. That’s just ridiculous!

IMO, it should be something more like JSFiddle: a simple prompt playground that does not require you to sign up, does not require API keys, and lets you experiment instantly, i.e. you just open a URL in the browser and start working. And above all, something that costs nothing when neither I nor my team is using it.

Eventually I just made it myself.

Goals for the launch

The goal of my Hacker News launch was to test whether there is genuine interest in LangFast. Not to sell, not to monetize yet, but to validate:

Do people resonate with the problem (“prompt testing is clunky”)?

Will they click, try, and spend time in the product?

Does the product idea have potential outside my own company (Yola.com) use case?

It was a market validation experiment: a way to measure awareness (visits, comments, reactions) and initial activation potential (people actually running prompts) before investing further.

Setup at launch

At launch I had:

No landing page. Just a sidebar saying “LangFast is your go-to prompt playground” and a list of key features.

No onboarding video or demo. Just a default prompt example when you open the app.

No sign-up required. Users could optionally create an account whenever they felt ready or needed one.

No payments. It would have been great to have them at launch, but I was still going through Paddle's verification process and did not want to block the launch.

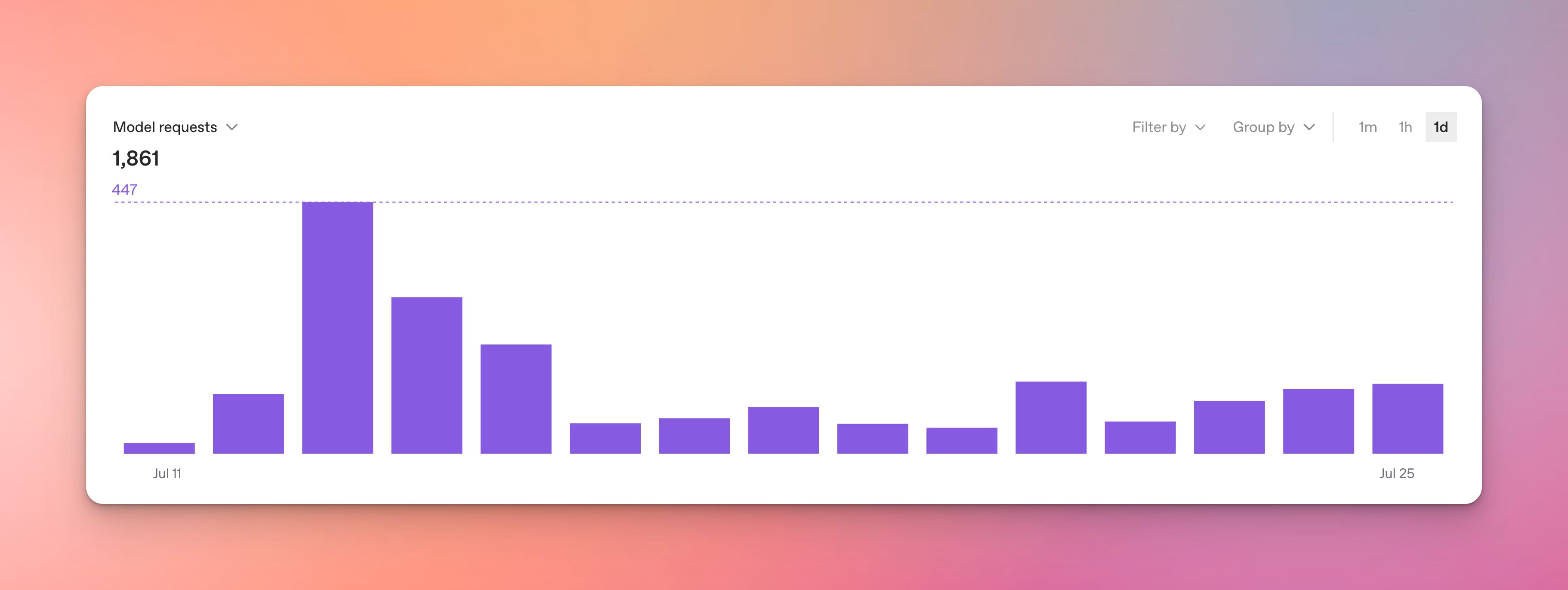

Basic analytics. DataFast for visitor analytics, and OpenAI dashboard for usage analytics.

Launch day and the spike

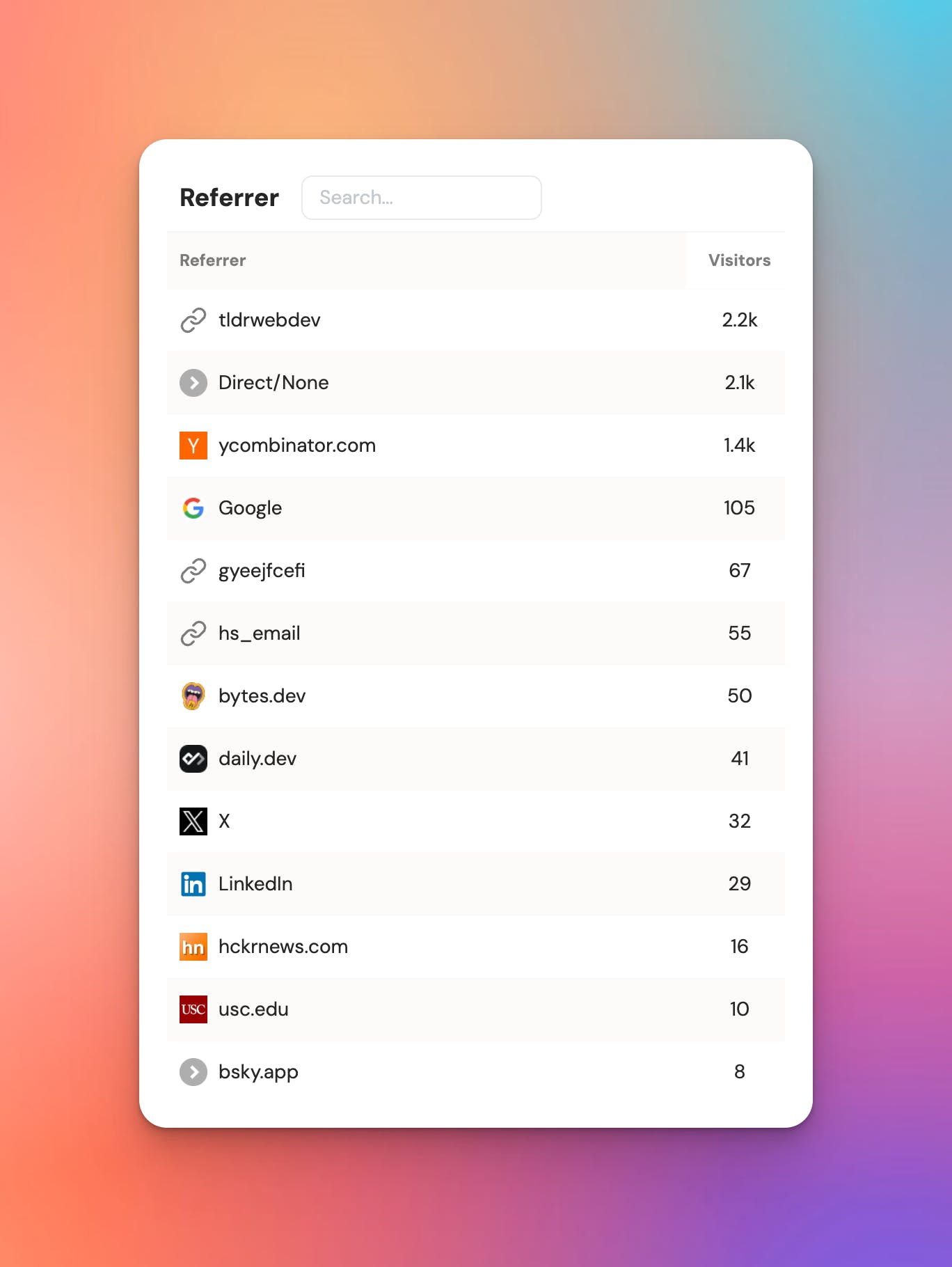

I launched it in the Show HN section with the following post, and echoed it on Reddit in r/SideProject and on my LinkedIn and Twitter pages.

The traffic and engagement came fast.

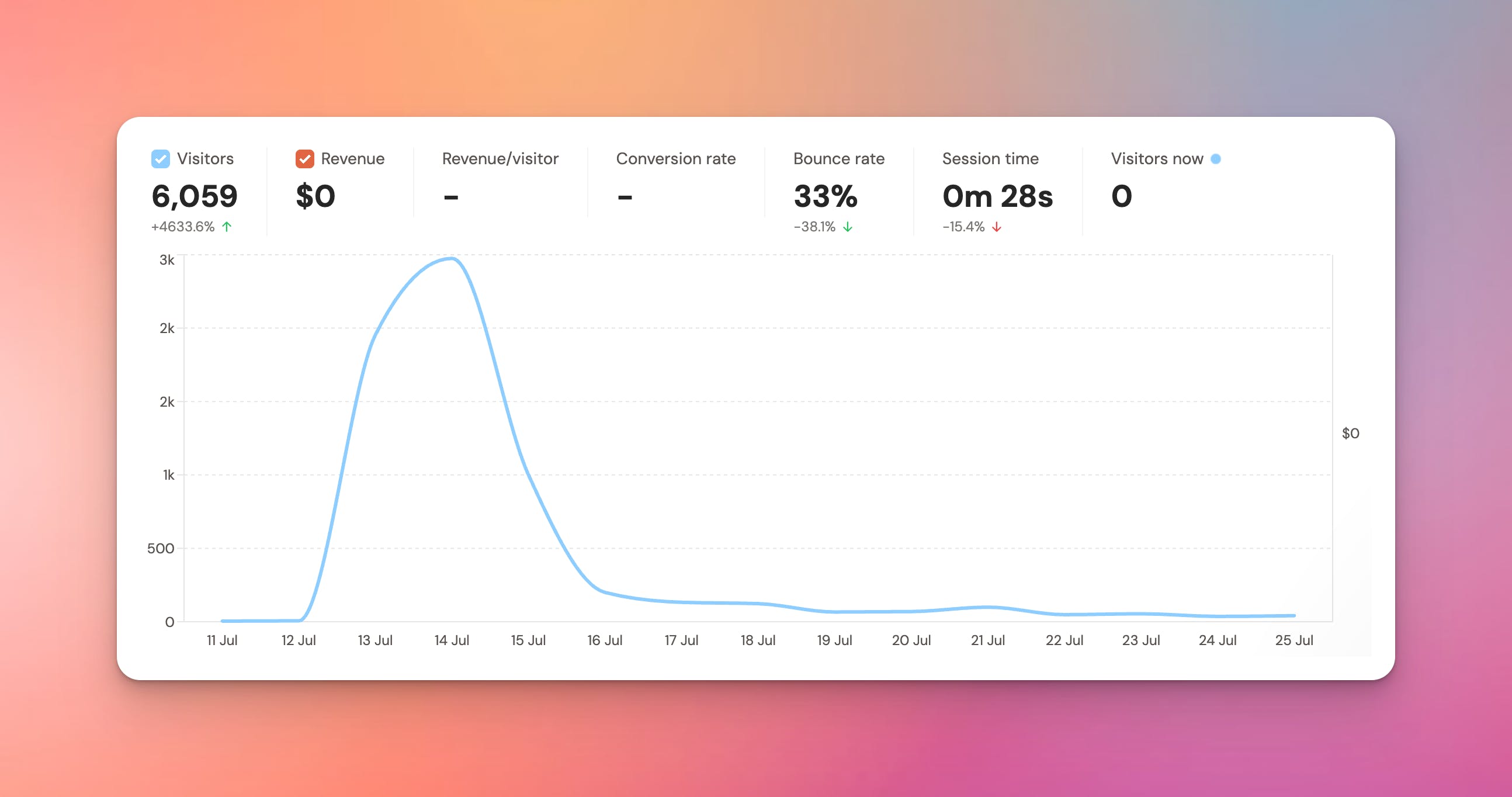

Top-line results:

6,000+ visits

~200 users tried it

~80 users signed up

$0 at launch by design

$60 a month later (one paying customer, not from this launch)

I was also quite surprised to see other platforms and newsletters pick up my launch (automatically, I think) and promote LangFast in their own channels:

OpenAI usage logs also showed spikes. Unfortunately, a few weeks later I can conclude that the users I gained did not stick with the app.

The good signals

Some people understood the pain and shared it:

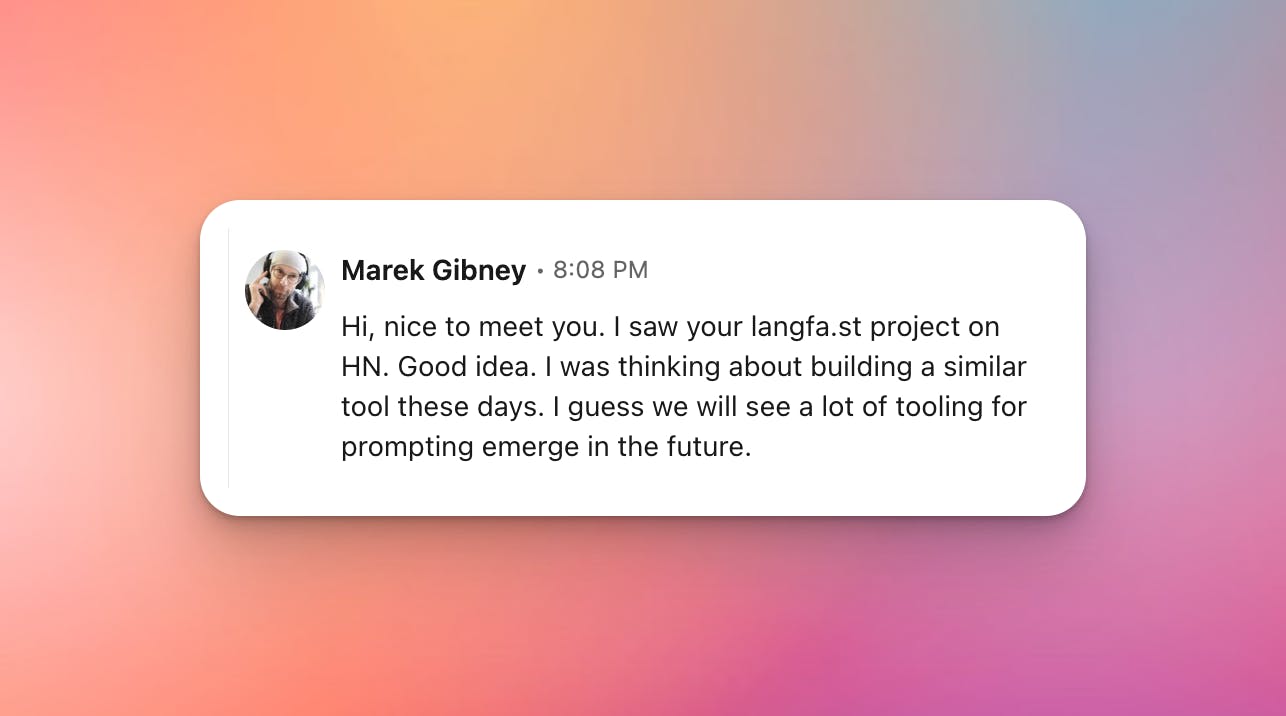

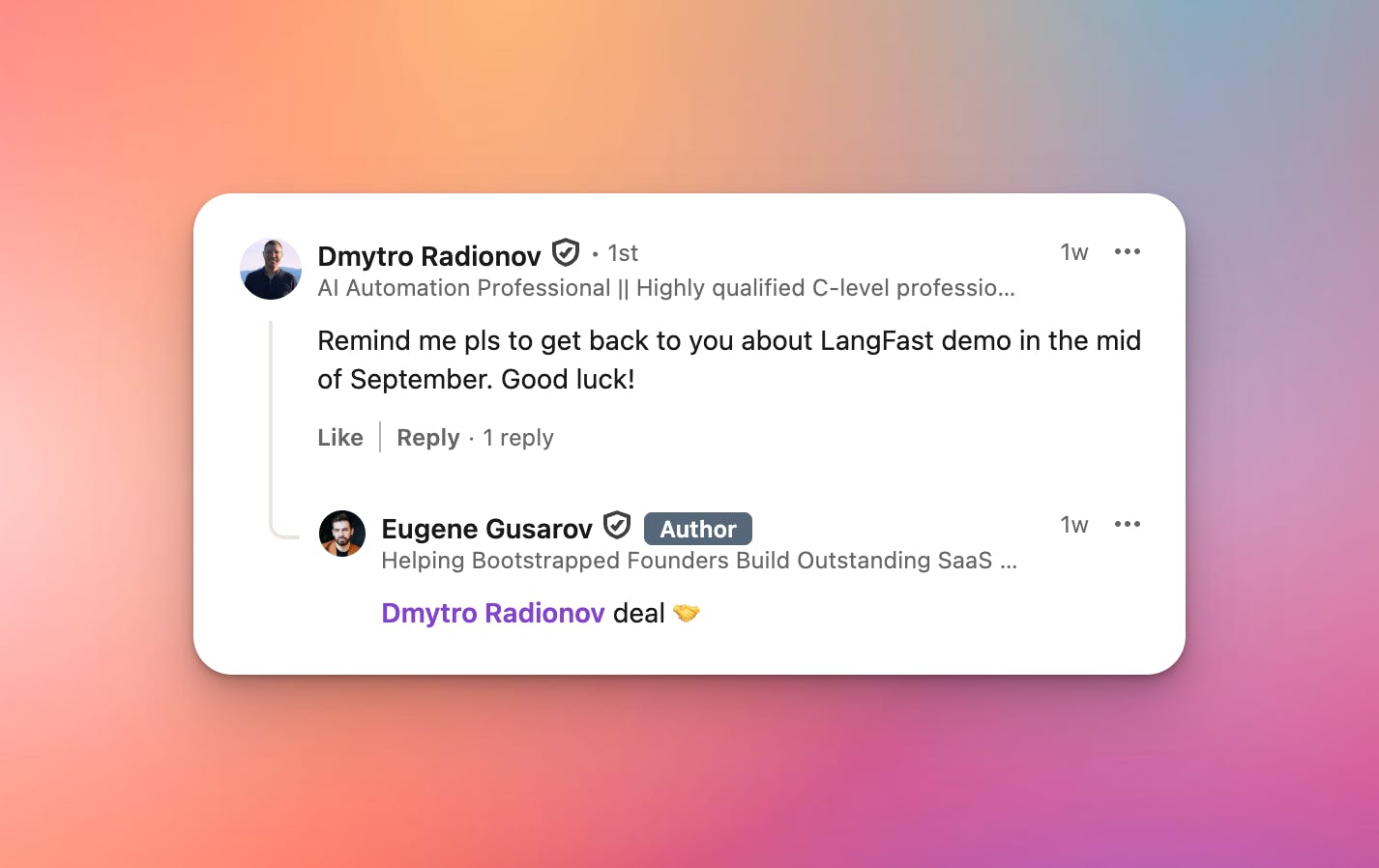

Some even cared enough to find and reach out to me directly on LinkedIn:

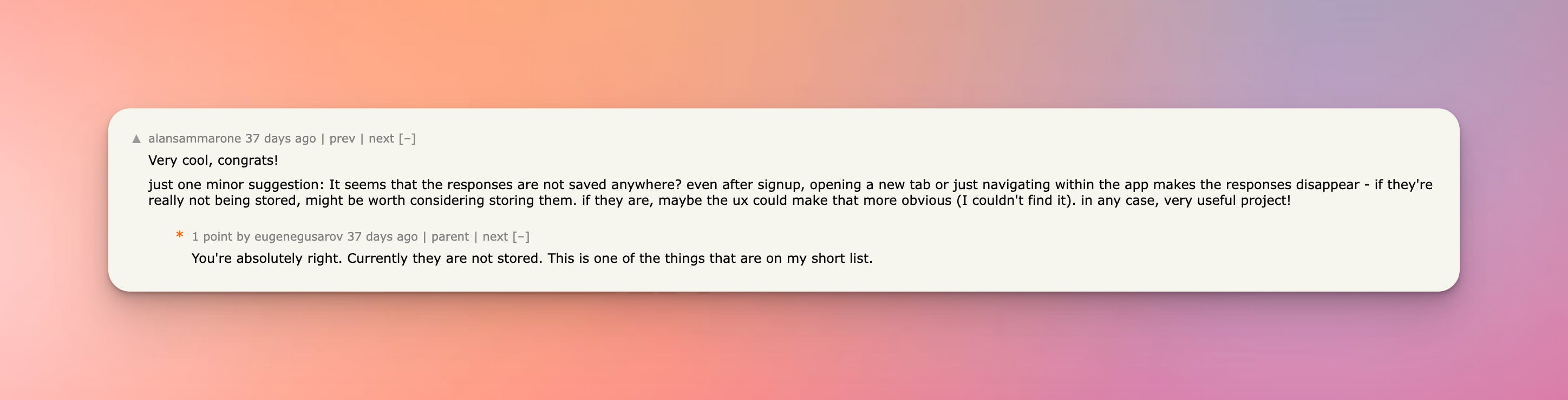

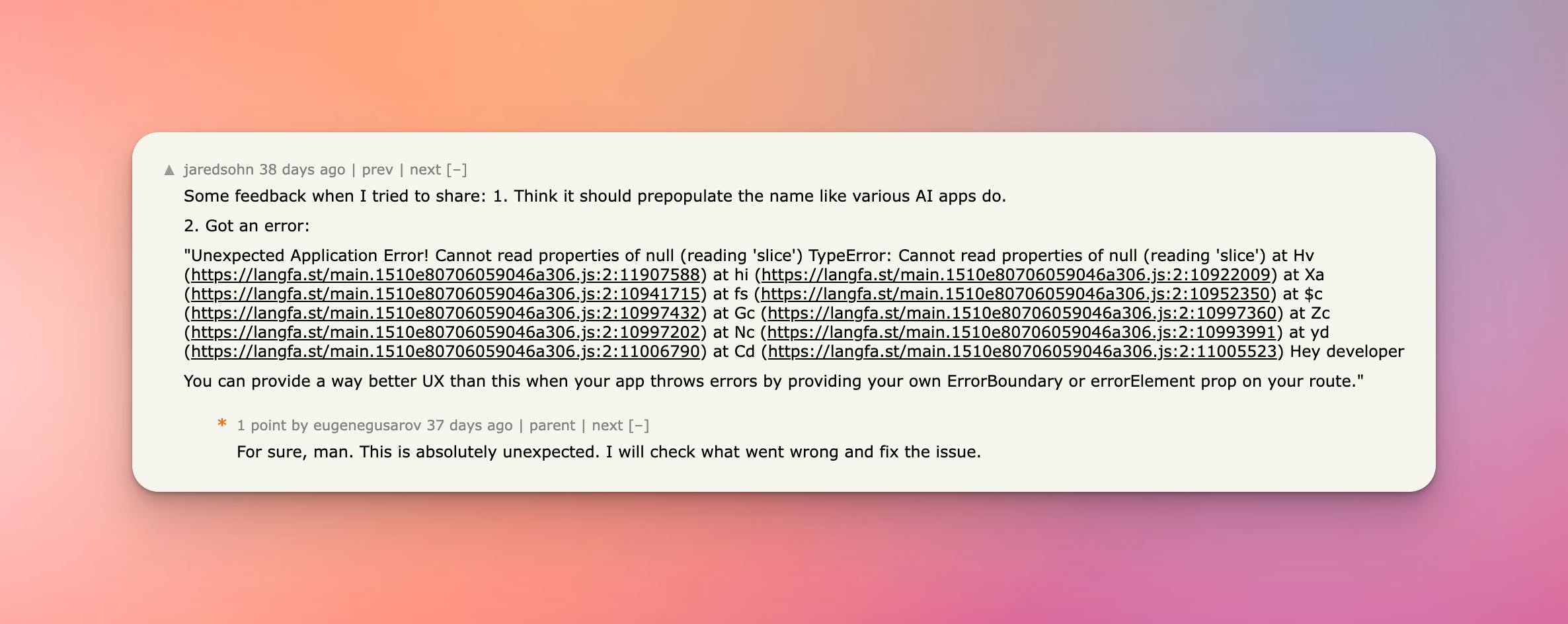

I have also received some legitimate feedback:

Awareness was real. Folks discovered the tool, clicked, looked around.

The hard truth

Activation and retention were weak. In my case, activation means people running prompts with their own examples and saving or sharing the results. That didn’t happen often enough.

A rough conversion:

Visit → user ≈ 200 / 6,500 ≈ 3.1%

Healthy ongoing usage after the spike: not visible
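The rough numbers above can be turned into a small step-by-step funnel calculation. This is only a sketch: the counts are the approximate figures quoted in this post (6,500 visits, ~200 users who tried it, ~80 sign-ups), and the helper names `conversion_rate` and `funnel_report` are made up for illustration.

```python
def conversion_rate(converted: int, total: int) -> float:
    """Return converted/total as a percentage, or 0.0 when total is zero."""
    if total == 0:
        return 0.0
    return 100.0 * converted / total


def funnel_report(steps):
    """Compute the conversion between each pair of consecutive funnel steps."""
    rows = []
    for (prev_name, prev_count), (name, count) in zip(steps, steps[1:]):
        rate = round(conversion_rate(count, prev_count), 1)
        rows.append((f"{prev_name} -> {name}", rate))
    return rows


# Approximate figures from this post; the "tried it" and "signed up"
# counts are rounded estimates, not exact measurements.
funnel = [("visits", 6500), ("tried it", 200), ("signed up", 80)]

for label, pct in funnel_report(funnel):
    print(f"{label}: {pct}%")
```

With these inputs the visit-to-user step comes out at about 3.1%, matching the estimate above, and roughly 40% of those who tried the app went on to sign up. Neither number says anything about retention, which is the part that was missing.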

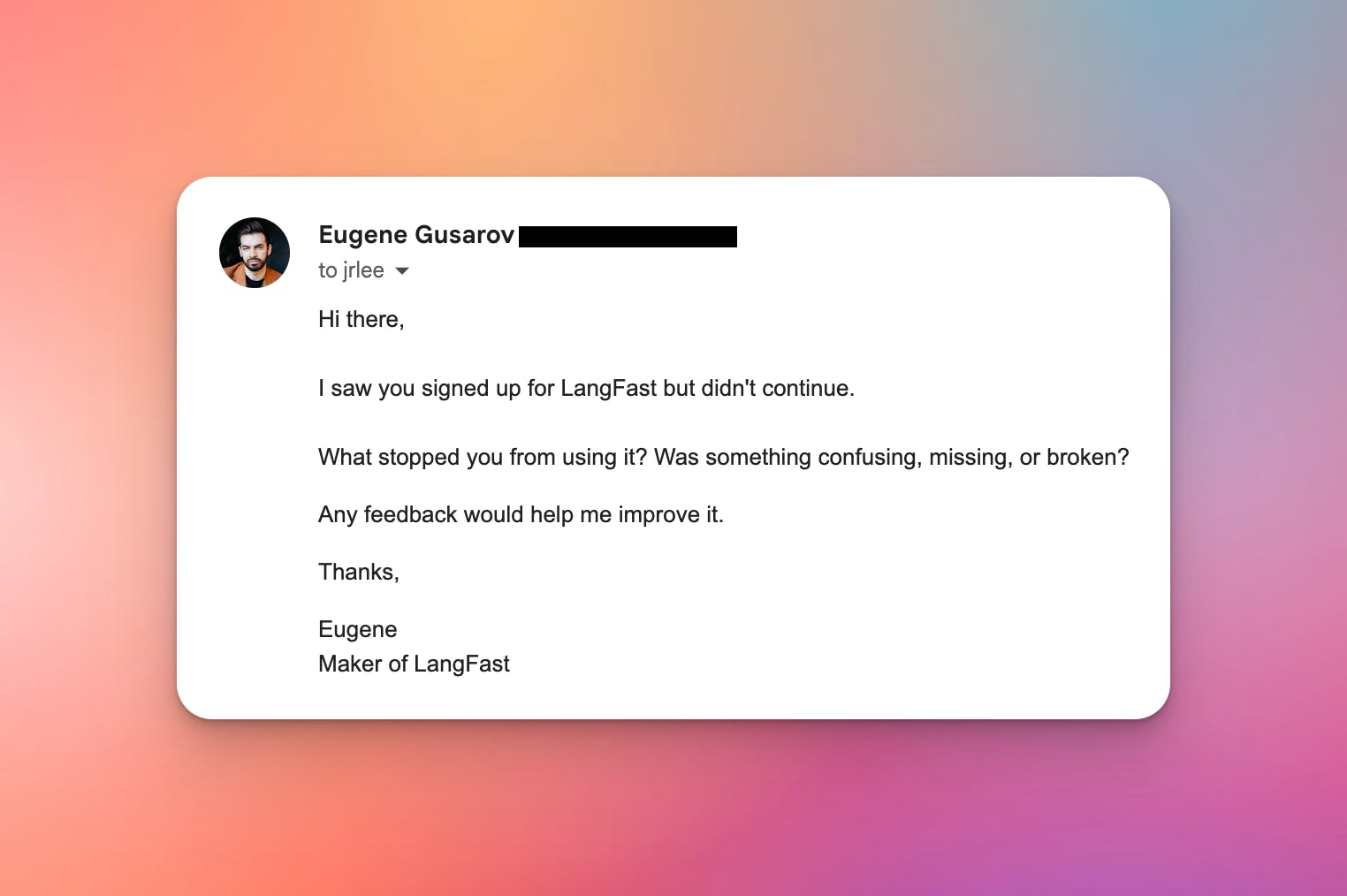

I have also reached out to 70+ users who signed up to get their feedback:

70+ emails sent → 0 replies (likely deliverability + low perceived value of the ask)

Why it happened (my read)

Poor targeting: I launched on platforms with broad audiences: HackerNews (developers and tech enthusiasts), LinkedIn (tech specialists in my case), Reddit (who knows who's in r/SideProject), and Twitter (90+ followers plus the Build In Public community).

Poor onboarding: I underestimated how much onboarding matters when launching to a broad audience. Things could have gone better if I had at least a landing page and a video demo of me explaining how to use LangFast.

Lack of a hook: The app is a weekly/bi-weekly tool by nature, and I'm lacking a strong “come back because you and your team need this” hook.

Insufficient analytics: I did not have any funnel analytics and key event tracking implemented per account. This significantly limited my ability to find the outliers and power users inside the app.
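To make the analytics gap concrete, here is a minimal sketch of per-account event tracking that would surface activated accounts and power users. It is not LangFast's implementation. The event names (`prompt_run`, `result_saved`, `result_shared`), the `Event` type, and the `min_runs` threshold are all hypothetical, and the sketch assumes `prompt_run` is only recorded for prompts the user wrote themselves, not for the default example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    account_id: str
    name: str  # hypothetical: "prompt_run", "result_saved", "result_shared"


def events_per_account(events):
    """Count how many times each event name occurred per account."""
    counts = defaultdict(lambda: defaultdict(int))
    for event in events:
        counts[event.account_id][event.name] += 1
    return counts


def is_activated(counts) -> bool:
    """Activation as defined in this post: ran prompts AND saved or shared results."""
    ran = counts.get("prompt_run", 0) > 0
    kept = counts.get("result_saved", 0) + counts.get("result_shared", 0) > 0
    return ran and kept


def power_users(events, min_runs=10):
    """Return activated accounts with at least min_runs prompt runs (assumed threshold)."""
    per_account = events_per_account(events)
    return sorted(
        account
        for account, counts in per_account.items()
        if is_activated(counts) and counts.get("prompt_run", 0) >= min_runs
    )


# Made-up sample data, for illustration only.
sample = (
    [Event("a", "prompt_run")] * 12
    + [Event("a", "result_shared")]
    + [Event("b", "prompt_run")] * 3
    + [Event("c", "prompt_run")] * 15
)
print(power_users(sample))
```

In the sample, account `c` runs many prompts but never saves or shares, so it is not counted as activated. That distinction is exactly what usage spikes in a provider dashboard cannot show.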

Insights and considerations

Message > mechanics. People won’t explore until they believe the tool solves their specific job.

“No sign-up” is great for trials, bad for learning. Gate save/share so qualified users identify themselves.

HN validates curiosity, not fit. Treat it as an attention test, then prove activation on a narrower ICP.

Outreach must meet timing. If they tried it once and left, your best chance is an in-app question during that visit, not an email three days later.

Conclusions

The pain is real and exists in a broader market.

The launch proved I can get attention.

The product does not create enough stickiness yet. I need to understand what's missing.

The product does not have an activation hook yet. I need to identify what it is.

Next steps

Do's:

Improve analytics to make it possible to find outliers and power users

Add a landing page and a demo to explain the product

Find and test more targeted channels: SEO and niche communities

Continue talking about the product and my learnings on IndieHackers, Reddit, ProductHunt, Twitter and LinkedIn.

Don'ts:

Do not add more features for the sake of more features. Prioritize the ones users have asked for first: storing execution logs, support for image inputs and outputs, support for the Response API, and evaluators.

Do not launch on ProductHunt until I have sufficient stickiness in the app.
