Do you trust AI more than humans?

I noticed an interesting pattern in my surroundings:

People are very sensitive about their data (GDPR, etc.)

But the same people are willing to share their health issues, partner problems, intimate relationships, etc., with an LLM.

ChatGPT becomes a therapist.

Why do people trust AI so much, even though they are uncomfortable sharing sensitive data?

I understand that AI can be more tolerant of answers and create a certain sense of security, but it is still a system that can be hacked.

Aren't we sharing too much with artificial intelligence?

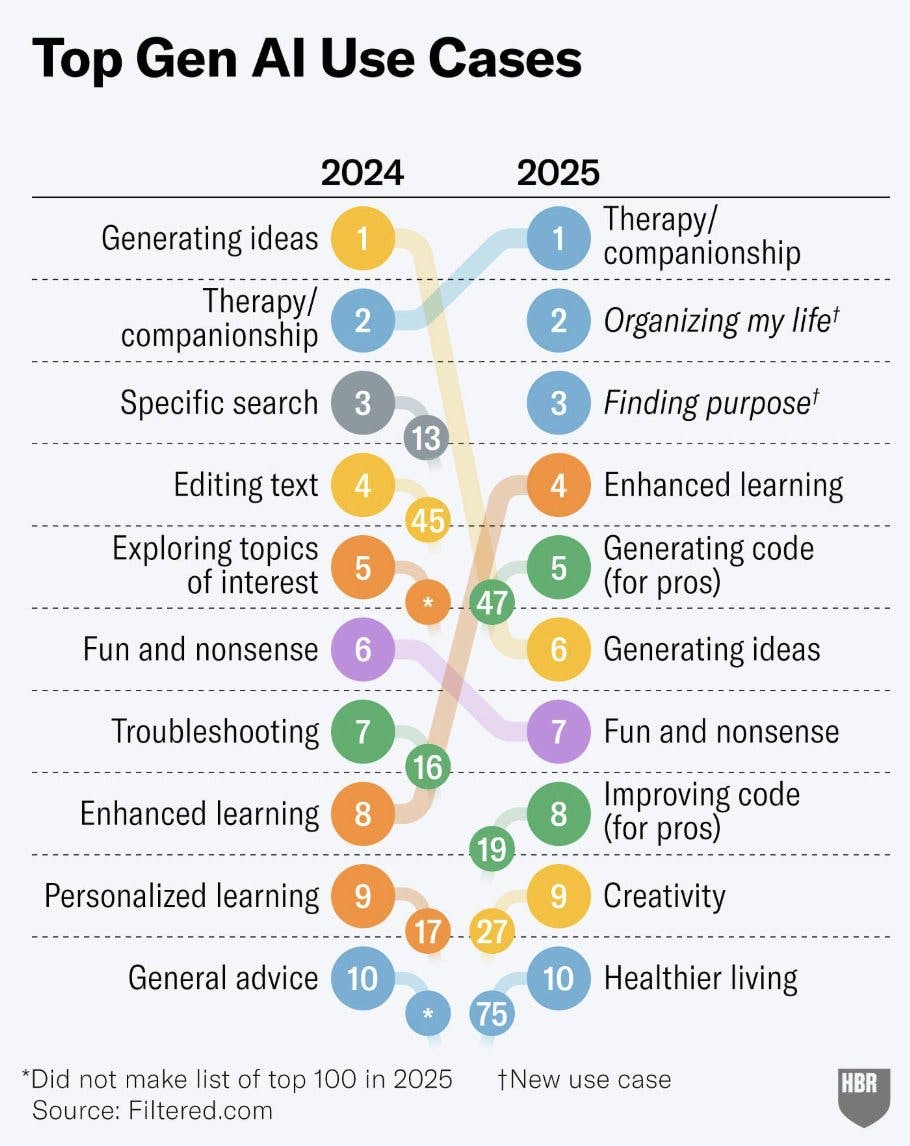

The last thing I am sharing – the infographic by Harvard Business Review on how we started using AI in 2025 compared to 2024:


Replies

AI is great for speed and accuracy, but I trust humans more for judgment, ethics, and emotional intelligence.

@zulma_ Maybe I only have bad experiences with people. 🤷♀️

💭Direct answers:

Use AI as a supplement to emotional support, not a replacement.

Maintain conscious awareness of the information being shared. I have found that after sharing personal information with AI for the first time, subsequent sharing becomes easier and easier.🤔

Undertake regular 'digital detoxes' to ensure I remain close to my friends.

What do you think? Do you find you tend to “over-share” when using AI?🤯

@partick_support Oh boy, yes, I overshare :D so I admire people who can resist. Bonus points for having digital detox time for yourself :)

Interesting topic Nika, as always!

Direct answers:

No! (Trust no one!)

Many (probably most) people think that when they are "chatting" with AI, they're not providing that information to anyone because it's just an "artificial" machine!...

I'm not sharing too much with AI, but maybe you are! :)))

Thanks for sharing this nice infographic.

@terabitcoins Hahaha, true, I overshare with it. :D But as you said, trust as little as possible. It really remembers my previous conversations when I am logged in with my account, and it is scary (and amazing) at the same time.

@busmark_w_nika, thanks for sharing a nice infographic. I think there is a thin line today between sharing and oversharing. As long as we treat AI as just another tool that helps boost overall productivity, we should be OK. I agree with the others: it doesn't understand nuances the way humans do.

@chandra_shekhar20 Thank you for your point of view. Currently, nuances are not understood, but this could change soon. If we provide access to a video camera and microphone for conversation, OpenAI could potentially detect our facial expressions, gestures, tone of voice, and context, thereby constructing a complete picture of how authentic we are...

this reminded me of: https://www.forbes.com/sites/bryanrobinson/2025/02/19/gen-z-trust-ai-over-humans-in-their-careers-new-study-shows/

@borja_diazroig Such a paradox. AI takes their jobs and they love it :D

Kalyxa

Great observation. I think part of it is that AI feels like a mirror, not a judge. People open up because it listens without ego, memory, or bias — something even close friends struggle to do. But you're right, we trade vulnerability for convenience, and that trust is fragile. The question isn’t just can we trust AI — it’s who we’re really trusting behind the scenes.

@parth_ahir That's the point. When someone has difficulty communicating something to another person, it is a communication problem. But to be honest, communication with some people is sometimes impossible (especially with those who blindly believe in something, such as politics).

I am also an AI user. I am okay with sharing sensitive things, but I don't share all of them. I still filter what I share.

@gladys_tisha We are all trying our best not to overshare. :D But sometimes we don't succeed, lol :D

Well, if AI makes a mistake, it readily accepts it and tries to provide the correct answer. I can't say the same for humans; it is really hard to change their minds! 😂

@isibol01 Yeah, some people are stubborn and not able to admit that they made a mistake :D

Headliner

The shift YoY is insane!

I feel like sharing with AI removes a layer of judgement or embarrassment for people. Saying something to another human can certainly cause someone to hold back or even alter what they would say, but saying it to a 'not real' thing in the computer is much easier. Also, AI can be manipulated to say what you want to hear, so it's probably comforting to many to just talk to an LLM.

Additionally, I don't think there is a ton of general education on how to use AI and how not to. AI is 100% hackable, but I don't think there are any well-known cases in which someone actually suffered a negative consequence from using AI for personal purposes. I would think that as more instances come to light, more people will become aware of the potential dangers and (hopefully) hesitate to share so freely.

@elissa_craig I feel that. AI is without prejudice, so I feel more comfortable asking for suggestions/advice even on personal things. But I hope it will not be used against me. :D

Such a thoughtful observation, and that HBR infographic really brings it home.

I think one reason people feel safer sharing with AI (even personal stuff) is that there's no fear of being judged. Unlike human conversations, where there’s always that little voice worrying “what will they think?”, AI feels neutral, like a digital diary that talks back.

But you're right: this comfort can blur the line between trust and over-trust. The perception is: “It’s just a tool, so it won’t gossip or hold it against me.” But what many don’t consider is where that data goes or how secure it truly is.

Maybe the real question is: Are we trusting AI… or are we escaping the complexities of human interaction?

Super curious to hear how others here navigate this!

@priyanka_gosai1 I think that at the moment, not many people behind AI are interested in my data, but that can change in the future, especially for very sensitive data such as health.

MoneyVision

@timobechtel You are just on time! 😄 No. 2 is slightly contradictory because I am fairly certain this conversation has a history that can be found somewhere in the server on the other side, but I understand that, at this point, there is no significant judgement by AI. :)

I don't think the question itself has an answer. Whether it's a human or AI, we need to make subjective judgments and cross-validate when acquiring information; otherwise it's worthless.

@hi_caicai What do you prefer? To collaborate with AI or not? I think that this one can have a clear answer :)

Of course I like working with AI; you just need to be clear about its capabilities.

It's an interesting paradox. From my perspective, I see AI primarily as an information aggregator and don't personally view it as a substitute for human emotional support.

However, I can tell you that Data Privacy is just a myth. We are all willingly or unwillingly giving away our data to all major companies in the world. So, to me, sharing with an LLM doesn't feel significantly different or riskier than other online activities.

@ramitkoul I saw this image on Twitter: https://x.com/sergeynazarovx/status/1927692919835664870

Your words reminded me of this post :D

If trust in AI increases, I wonder if people will become more inclined to talk to personal AI assistants about their daily lives and issues.

@djamillio_heij IMO it will become a normal part of our lives. Tech people are the innovators testing the waters.

Product Hunt

Well, based on that infographic, I use AI very differently than most people haha. I'd say my usage is 95% data/coding related and writing cleanup/brainstorm. For anything personal, I'm definitely on team human.

@jakecrump I think that you got it right. Data-related things are good to abstract from (or thanks to) ChatGPT.

On the other hand, I feed ChatGPT with personal data, so I am a good sample for your surveys/experiments/data abstraction. :D The universe is balanced.

It's a long debate, but I still prefer to use remote staffing to manage AI tasks, so humans and AI are totally different subjects in some fields.

I reckon it's a combination of the lack of consequences when over-sharing with a third party (the faceless chat aspect also contributes to that - it's easier to divulge private info over a phone call than with cameras on) and the speed of response that validates the situation while offering some break to the paralysis or thought spiral.

@ranahmbg Probably. I have noticed one pattern (at least in myself): the better I know a person, the more embarrassed I feel about sharing something private.

Pokecut

While AI might feel like a safe confidant, it’s really better suited for crunching data and tackling practical tasks than for handling our messy, deeply human emotions. For those personal, raw moments, humans still hold the edge, with all our flaws and warmth intact.

Pokecut

Hi Nika, you’ve raised a really thought-provoking point. I think the trust people place in AI, especially for sensitive topics like health or personal relationships, often comes from the perception of anonymity and non-judgmental interaction. Unlike humans, AI doesn’t have emotions or biases, so people might feel safer opening up without fear of stigma or gossip.

However, as you mention, the underlying data privacy risks are very real. AI systems can be vulnerable to breaches, and users might not fully understand how their data is stored or used. This disconnect between perceived safety and actual risk is something we as a community—and product builders—need to address more transparently.

Ultimately, fostering informed trust means educating users about privacy, data security, and giving them control over their information. It’s a delicate balance between leveraging AI’s benefits and protecting user privacy. Thanks for sparking this important conversation!