Llama

Meta's open-source family of LLMs

4.9 • 72 reviews • 1.3K followers

Launched on December 13th, 2024

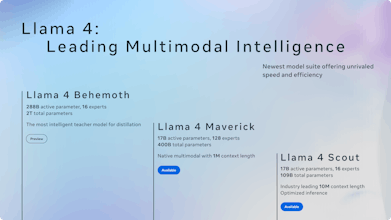

Llama, developed by Meta, is praised for being open source and for handling complex language tasks efficiently. Reviewers rate its language understanding and versatility highly, which has made it a popular choice across many kinds of AI applications. Maker reviews highlight its integration into platforms such as Instagram, Daily.co, and MindPal, and emphasize how well it handles conversations and supports AI agent development. Users also value its cost-effectiveness and adaptability, which make it a strong competitor in the AI landscape.