Symbol

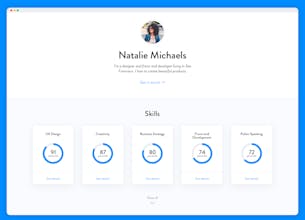

A new, universal way to prove your skills

3 followers

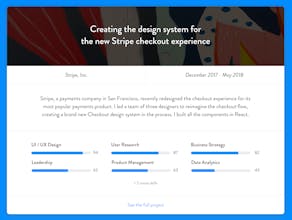

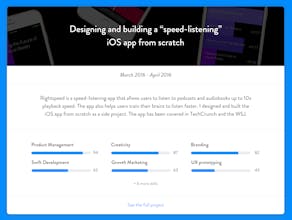

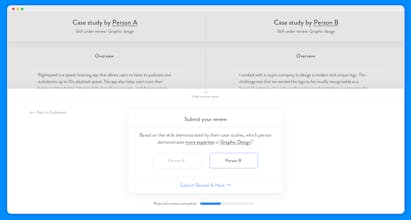

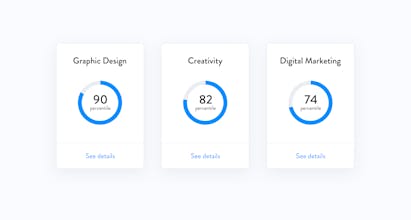

Publish a beautiful portfolio of your work and see where you rank globally for any of your skills. Rankings are free and over 95% accurate.

I feel like there's not really a need for a tool like this.

Pros: Nice interface... I guess?

Cons: Can we all stop using percentiles to measure skills?

Monthly