Launched on December 13th, 2024

Llama, developed by Meta, is praised for its open-source nature and its efficiency on complex language tasks. It is highly regarded for its language understanding and versatility, which make it a popular choice for a wide range of AI applications. Maker reviews highlight its integration in platforms like Instagram, Daily.co, and MindPal, emphasizing its effectiveness in handling conversations and building AI agents. Users appreciate its cost-effectiveness and adaptability, which make it a strong competitor in the AI landscape.

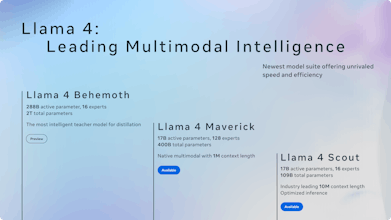

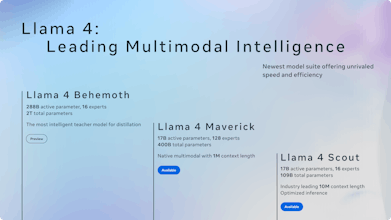

The new herd of Llamas from Meta:

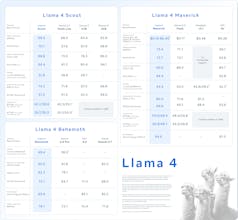

Llama 4 Scout:

• 17B active parameters, 16 experts

• Natively multi-modal

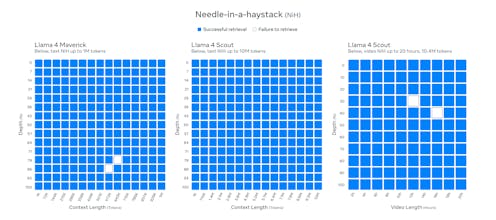

• 10M token context length

• Runs on a single GPU

• Highest-performing small model

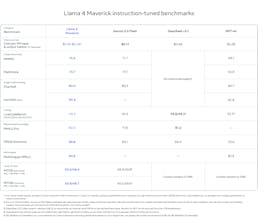

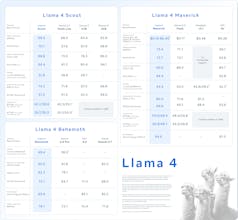

Llama 4 Maverick:

• 17B active parameters, 128 experts

• Natively multi-modal

• Beats GPT-4o and Gemini 2.0 Flash

• Smaller and more efficient than DeepSeek, with comparable results on text, while also being multimodal

• Runs on a single host

Llama 4 Behemoth:

• 2+ trillion parameters

• Highest-performing base model

• Still training!

Toki: AI Reminder & Calendar

@chrismessina Just wanna leave a thread here: Llama 4 joke collectors, gather now! ...

Jokes and memes aside (we love them), Llama is great; people just expected more from Llama 4. Fight really hard for Llama 5, Meta!

And definitely thanks for hunting this, Chris!

Mailgo

Impressive launch for Llama 4! Curious, though: how do you manage efficiency and latency challenges with the mixture-of-experts setup, especially in real-time multimodal applications? @ashwinbmeta

Strawberry

Can't wait to try this out. We're experimenting with running models on-device for our product (a desktop app), but we haven't been able to get great results on the average laptop yet. Looking forward to seeing real-world inference speeds for these models.

@sebastian_thunman I saw Strawberry; I think it's insane!