LangChain enables large language models (LLMs) to generate responses based on up-to-date information from external sources, such as the web or your own documents. It also simplifies organizing large volumes of data so that LLMs can access it easily.

With LangChain, you can build dynamic, data-responsive applications. Developers have used the open-source framework to build AI chatbots, generative question-answering (GQA) systems, and language summarization tools.

In this article, we take a deep dive into LangChain: what it is, how it works, what it’s used for, and more.

What is LangChain?

Simply put, LangChain is an open-source framework for developing applications powered by language models.

It is designed to simplify the process of building these applications by providing a set of tools and abstractions that make it easier to connect language models to other data sources, interact with their environment, and build complex applications.

LangChain is available as both a Python and a JavaScript/TypeScript library, and it supports a wide variety of language models, including OpenAI's GPT-3, AI21's Jurassic-1 Jumbo, and models hosted on Hugging Face.

How does LangChain work?

To use LangChain, you need access to a language model. You can use a hosted model through a provider's API, such as OpenAI's GPT-3, run an open-source model yourself, or train your own.

Once you have a language model, you can start building applications with LangChain. LangChain provides a variety of tools and APIs that make it easy to connect language models to other data sources, interact with their environment, and build complex applications.

LangChain works by chaining together a series of components, sometimes called links, to create a workflow. Each component in the chain performs a specific task, such as:

- Formatting user input

- Accessing a data source

- Calling a language model

- Processing the output of the language model

The components in a chain are connected in sequence, and the output of one component is passed as the input to the next. This lets a chain perform complex tasks by combining simple ones.

For example, a simple chatbot could be built as a chain that formats the user's message into a prompt, calls a language model with that prompt, and processes the model's output before returning it to the user.

The components in a chain can be customized to perform different tasks, and their order can be changed to create different workflows. This makes LangChain a flexible framework that can be used to build a wide variety of applications.
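The sequential flow described above can be sketched in plain Python. This is not LangChain's API: the step functions (format_input, fake_model, clean_output) and the run_chain helper are hypothetical, and fake_model merely echoes the question in place of a real model call.

```python
from typing import Callable, List

# Each step takes the previous step's output and returns a new value.
Step = Callable[[str], str]


def format_input(user_message: str) -> str:
    """Step 1: wrap the raw user message in a prompt."""
    return f"You are a helpful assistant.\nUser: {user_message.strip()}\nAssistant:"


def fake_model(prompt: str) -> str:
    """Step 2: hypothetical stand-in for an LLM call; it just echoes the question."""
    question = prompt.split("User: ", 1)[1].split("\n", 1)[0]
    return f"  You asked: {question}  "


def clean_output(raw: str) -> str:
    """Step 3: post-process the model's raw output."""
    return raw.strip()


def run_chain(steps: List[Step], value: str) -> str:
    """Feed each step's output into the next step, in order."""
    for step in steps:
        value = step(value)
    return value


chatbot = [format_input, fake_model, clean_output]
print(run_chain(chatbot, "  What is LangChain? "))  # You asked: What is LangChain?
```

Because a chain here is just a list, reordering or swapping entries yields a different workflow. LangChain itself composes its components through its own interfaces (in recent versions, the | operator) rather than a plain list.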

What are the core components of LangChain?

LangChain breaks the natural language processing pipeline into separate, modular components, so developers can tailor workflows to their needs.

Here are the core components of LangChain:

Prompt templates

Prompt templates are used to format user input in a way that the language model can understand. They can be used to add context to the user's input, or to specify the task that the language model should perform. For example, a prompt template for a chatbot might include the name of the user and the user's question.
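As a minimal sketch of what a prompt template does, the snippet below fills placeholders with Python's built-in str.format. The template text and the format_prompt helper are hypothetical; LangChain provides its own template classes (such as PromptTemplate), whose default placeholder syntax uses the same curly braces.

```python
# Hypothetical template: placeholders in braces are filled in at run time.
CHATBOT_TEMPLATE = (
    "You are a friendly support assistant.\n"
    "The user's name is {user_name}.\n"
    "Answer their question: {question}"
)


def format_prompt(template: str, **values: str) -> str:
    """Fill the template's placeholders; str.format raises KeyError if one is missing."""
    return template.format(**values)


prompt = format_prompt(CHATBOT_TEMPLATE, user_name="Ada", question="How do I reset my password?")
print(prompt)
```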

LLMs

LLMs are large language models that are trained on massive datasets of text and code. They can be used to perform a variety of tasks, such as generating text, translating languages, and answering questions.

Indexes

Indexes structure external data, such as your own documents, so that an LLM can make use of it; they do not store the LLM's training data. An index can hold the text of the documents, their metadata, and the relationships between them. Retrievers use indexes to find the information that is relevant to a user's query.
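To illustrate the idea of an index over your own documents, here is a plain-Python sketch that splits documents into small chunks and keeps each chunk's source as metadata. Chunk and build_index are hypothetical names, and chunking by a fixed word count is an assumption chosen for simplicity; LangChain's own indexing uses document loaders, text splitters, and vector stores.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Chunk:
    """One indexed piece of a document plus metadata about where it came from."""
    text: str
    metadata: Dict[str, str] = field(default_factory=dict)


def build_index(documents: Dict[str, str], chunk_size: int = 40) -> List[Chunk]:
    """Split each document into chunks of at most chunk_size words, keeping source metadata."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    index: List[Chunk] = []
    for source, text in documents.items():
        words = text.split()
        for start in range(0, len(words), chunk_size):
            piece = " ".join(words[start:start + chunk_size])
            index.append(Chunk(piece, {"source": source, "chunk": str(start // chunk_size)}))
    return index


docs = {
    "faq.txt": "Reset your password from the account settings page at any time.",
    "policy.txt": "Passwords expire after ninety days.",
}
for chunk in build_index(docs, chunk_size=5):
    print(chunk.metadata, chunk.text)
```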

Retrievers

Retrievers search an index for information relevant to a query. They can find the documents that best match a user's question, or the documents most similar to a given document. By passing only the most relevant material to the LLM, retrievers keep prompts short and help ground the model's responses in your data, which improves both their speed and their accuracy.
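A retriever can be as simple as ranking documents by how many words they share with the query. The sketch below assumes that word-overlap scoring purely for illustration (tokenize and retrieve are hypothetical helpers); production retrievers typically use embedding similarity or ranking functions such as BM25.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents that share the most words with the query."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc)), doc) for doc in documents]
    # Keep only documents with at least one shared word, best score first
    # (sorted() is stable, so ties keep their original order).
    ranked = sorted((pair for pair in scored if pair[0] > 0), key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in ranked[:k]]


docs = [
    "Reset your password from the account settings page.",
    "Our office is closed on public holidays.",
    "Password rules: at least 12 characters.",
]
print(retrieve("How do I reset my password?", docs))
```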

Output parsers

Output parsers turn the raw text an LLM returns into a form your application can use. They can remove unwanted content, add information, or convert the response into a different format, such as a list or a JSON object. Output parsers help ensure that the LLM's responses are easy to understand and use.
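The sketch below shows two common parsing jobs in plain Python: splitting a comma-separated reply into a list, and pulling a JSON object out of a reply that wraps it in extra prose. The helper names are hypothetical; LangChain ships its own parsers for similar tasks, such as CommaSeparatedListOutputParser and JsonOutputParser.

```python
import json
from typing import Any, Dict, List


def parse_comma_list(text: str) -> List[str]:
    """Turn 'red, green , blue,' into ['red', 'green', 'blue'], skipping empty items."""
    return [item.strip() for item in text.split(",") if item.strip()]


def parse_json_object(text: str) -> Dict[str, Any]:
    """Parse the text between the first '{' and the last '}' as JSON.

    Models often surround JSON with extra prose, so the braces are located first.
    """
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    return json.loads(text[start:end + 1])


print(parse_comma_list("red, green , blue,"))
print(parse_json_object('Sure! Here it is: {"city": "Paris", "population": 2100000} Hope that helps.'))
```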

Agents

Agents use a language model to reason about a problem, break it down into smaller sub-tasks, and decide which actions to take. They can control the flow of a chain and choose which tasks or tools to use at each step. For example, an agent could determine whether a user's question is best answered by a language model or by a human expert.
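The decide-then-act pattern behind agents can be sketched as follows. In a real LangChain agent, the language model chooses the tool; here, a hypothetical regex rule (choose_tool) stands in for that decision, and the tools calculator and ask_llm are made up for illustration. A routing choice between a language model and a human expert would follow the same shape.

```python
import operator
import re
from typing import Callable, Dict

# Pattern for a simple "a <op> b" expression with non-negative integers.
EXPRESSION = re.compile(r"(\d+)\s*([-+*/])\s*(\d+)")
OPERATIONS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(question: str) -> str:
    """Tool: evaluate the first 'a <op> b' expression in the question."""
    match = EXPRESSION.search(question)
    if match is None:
        return "No arithmetic expression found."
    a, op, b = match.groups()
    if op == "/" and int(b) == 0:
        return "Cannot divide by zero."
    return str(OPERATIONS[op](int(a), int(b)))


def ask_llm(question: str) -> str:
    """Tool: hypothetical stand-in for a language-model call."""
    return f"[LLM answer to: {question}]"


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator, "llm": ask_llm}


def choose_tool(question: str) -> str:
    """Decision step. A real agent asks the LLM which tool to use; a regex rule stands in here."""
    return "calculator" if EXPRESSION.search(question) else "llm"


def run_agent(question: str) -> str:
    """One decide-then-act step: pick a tool, then call it."""
    return TOOLS[choose_tool(question)](question)


print(run_agent("What is 12 * 7?"))    # 84
print(run_agent("Who wrote Hamlet?"))  # [LLM answer to: Who wrote Hamlet?]
```

Real agents typically repeat this step in a loop, feeding each tool's result back to the model until it decides it has a final answer.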

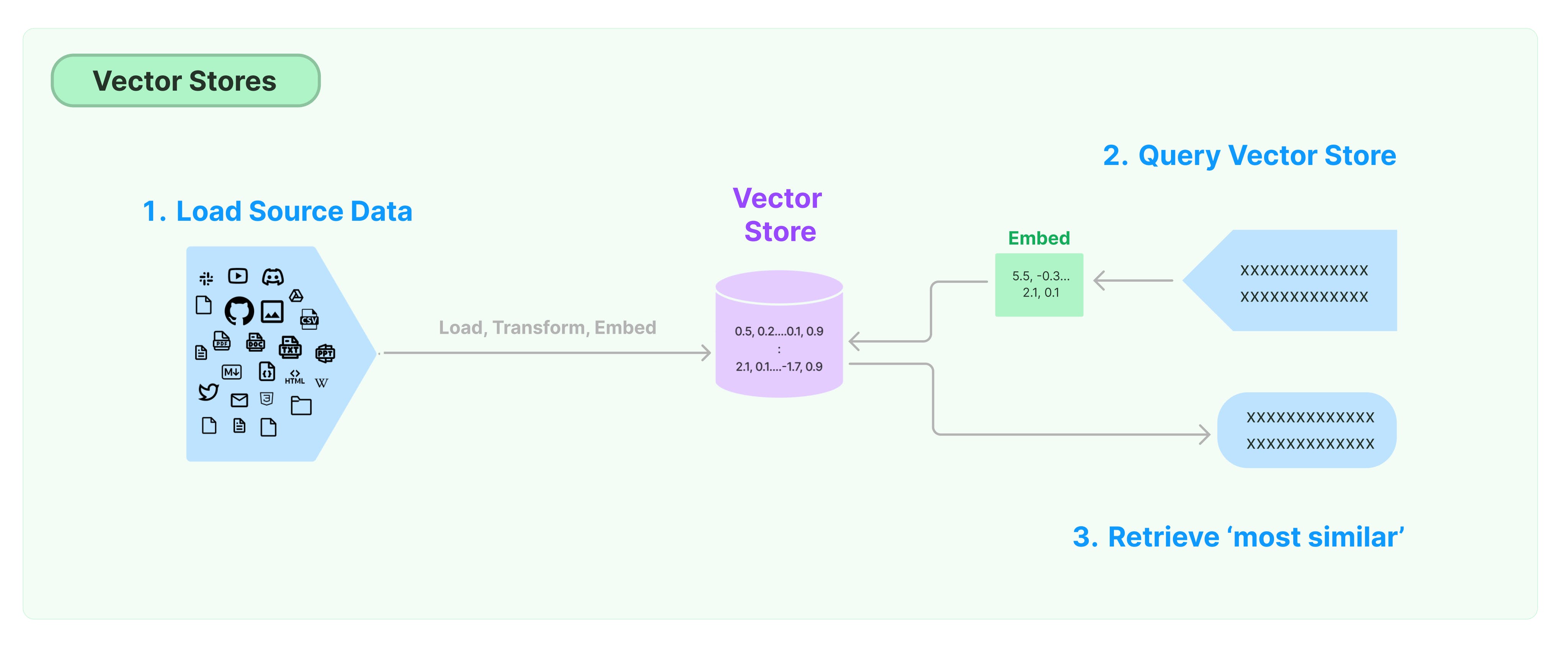

Vector stores

Vector stores are databases that store vectors (embeddings), which are numerical representations of words, phrases, or whole documents. Because similar text maps to nearby vectors, a vector store can find text that is semantically similar to a query, which is helpful for tasks such as question answering and summarization. For example, a vector store could be used to find all of the words that are similar to the word "cat".
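A vector store boils down to storing vectors and returning the ones closest to a query vector. The sketch below assumes cosine similarity and a brute-force scan; ToyVectorStore is a hypothetical class, and the three-dimensional vectors are made up by hand, whereas real embeddings come from an embedding model and have far more dimensions.

```python
import math
from typing import List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store of (text, vector) pairs with brute-force search."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Vector]] = []

    def add(self, text: str, vector: Vector) -> None:
        self.items.append((text, vector))

    def search(self, query: Vector, k: int = 2) -> List[str]:
        """Return the k stored texts whose vectors are most similar to the query."""
        ranked = sorted(self.items, key=lambda item: cosine_similarity(query, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


# Made-up 3-dimensional vectors; real embeddings come from an embedding model.
store = ToyVectorStore()
store.add("kitten", [0.9, 0.7, 0.0])
store.add("dog", [0.7, 0.9, 0.1])
store.add("car", [0.0, 0.1, 0.9])

cat = [0.9, 0.8, 0.0]
print(store.search(cat, k=2))  # ['kitten', 'dog']
```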

Advantages of using LangChain

Ease of use: LangChain provides a high-level API that makes it easy to connect language models to other data sources and build complex applications.

Flexibility: The framework can be used to build a wide variety of applications, from chatbots to question-answering systems.

Scalability: LangChain can be used to build applications that can handle large amounts of data.

Open-source: As an open-source framework, LangChain is free to use and modify.

Community support: There is a large and active community of LangChain users and developers who can provide help and support.

Documentation: The documentation is comprehensive and easy to follow.

Integration with other frameworks: LangChain can be integrated with other frameworks and libraries, such as Flask and TensorFlow.

Extensibility: The framework is extensible, so developers can add their own features and functionality.

How to use LangChain

1. Install LangChain

You can install LangChain using the pip command:

pip install langchain

2. Create a new project

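LangChain does not require a special project layout or an init command. Create a new directory for your project, ideally with its own virtual environment (for example, python -m venv .venv), and set the API key for your model provider as an environment variable, such as OPENAI_API_KEY for OpenAI models.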

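3. Import the necessary modules

Current versions of LangChain keep the core abstractions in langchain-core and ship model integrations as separate packages (for OpenAI models: pip install langchain-openai). For a chain that calls an OpenAI chat model, you will need the following imports:

from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser
from langchain_openai import ChatOpenAI

4. Create a chain

A chain is a sequence of components that together perform a specific task. In current versions of LangChain, you compose components with the | operator, and each component's output becomes the next one's input. For example, the following code creates a chain that fills in a prompt template, calls a language model, and extracts the text of its response (the model name is only an example; use any chat model your account can access):

prompt = ChatPromptTemplate.from_template("{question}")
model = ChatOpenAI(model="gpt-4o-mini")
chain = prompt | model | StrOutputParser()

5. Run the chain

To run a chain, call its invoke() method with values for the prompt's placeholders. For example, the following code runs the chain created in the previous step:

output = chain.invoke({"question": "What is the meaning of life?"})

6. Get the output of the chain

The output of a chain is the output of its last component. invoke() returns that output directly, so no separate call is needed to fetch it. Because the chain above ends with StrOutputParser, output is a plain string:

print(output)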

7. Customize the chain

You can customize the chain by adding, removing, or reordering components, or by changing their parameters, such as the prompt text or the model.

What applications can be built with LangChain?

Chatbots

LangChain can be used to build chatbots that answer questions, provide customer service, or even generate creative text such as poems, code, scripts, musical pieces, emails, and letters.

Question answering systems

LangChain can be used to build question answering systems that can access and process information from a variety of sources, including databases, APIs, and the web.

Summarization systems

LangChain can be used to build summarization systems that can generate summaries of news articles, blog posts, or other types of text.

Content generators

LangChain can be used to build content generators that can generate text that is both informative and engaging.

Data analysis tools

LangChain can be used to build data analysis tools that can help users to understand the relationships between different data points.

Is LangChain open source and free to use?

Yes, LangChain is open source (released under the MIT license) and free to use. You can download the source code from GitHub and use it to build your own applications.

LangChain does not ship its own pre-trained models. Instead, it connects to models from providers such as OpenAI, or to open-source models such as those hosted on Hugging Face.

How to install LangChain

The source code for LangChain is available on GitHub. You can download the source code and install it on your computer. LangChain is also available as a Docker image, which makes it easy to deploy it on a cloud platform.

Alternatively, you can install it with a simple pip command on Python:

pip install langchain

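Older releases of LangChain offered an all extra that installed the dependencies for every integration at once (pip install "langchain[all]"; the quotes stop shells such as zsh from interpreting the brackets). Recent releases distribute most integrations as separate packages, so you install only the ones you need, for example:

pip install langchain-openai langchain-community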