The chip company is usually associated with high-end gaming, but with the rise of AI it has become a powerhouse of future tech. Thanks to the AI chip boom, it’s now worth more than Alphabet and Amazon, having hit a market cap of $1.83 trillion.

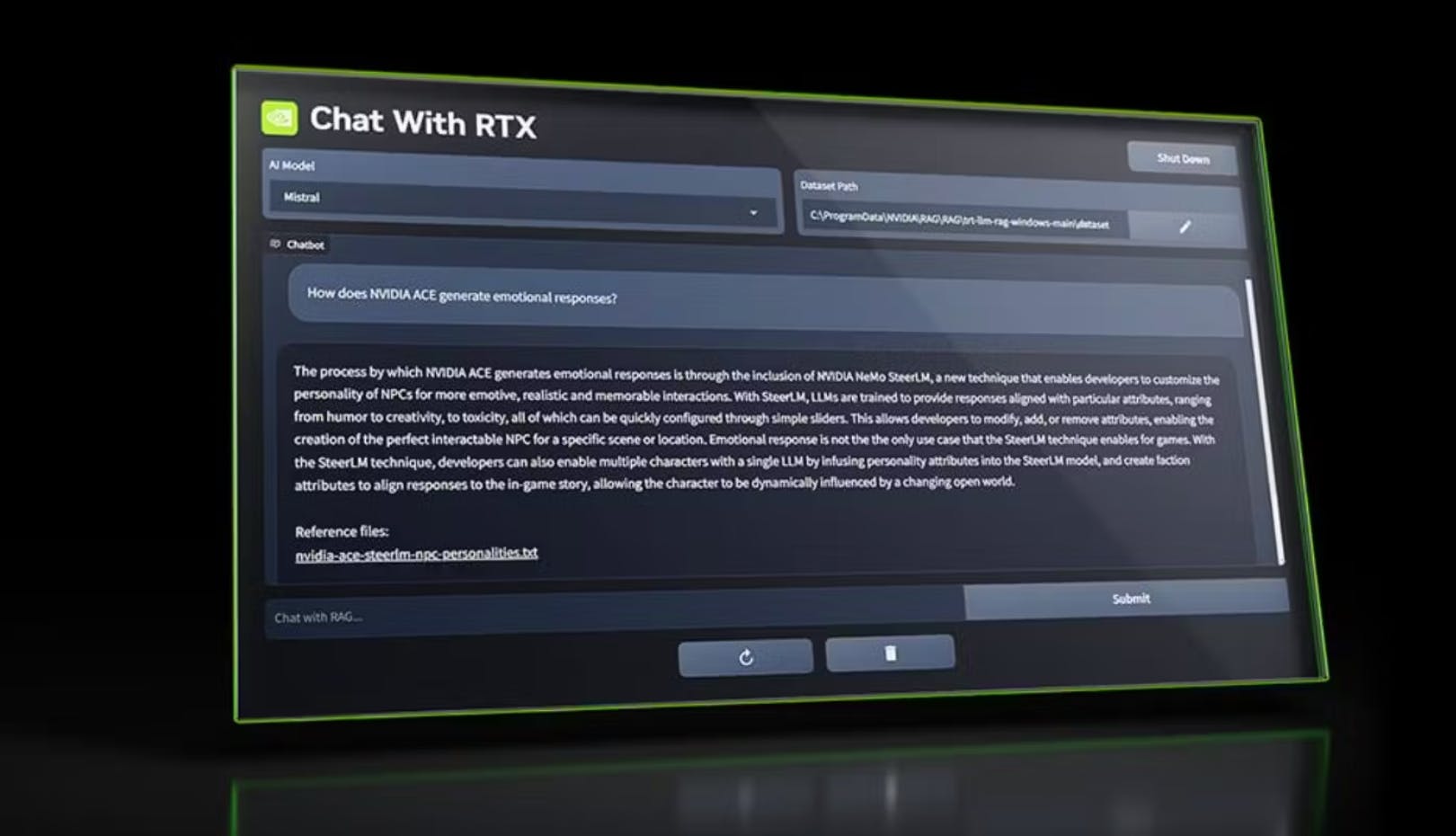

Now, Nvidia has decided to dabble in consumer-facing AI with the launch of Nvidia Chat with RTX. It’s a demo app (currently available for certain Windows machines) that lets you build a custom GPT large language model (LLM) that’s connected to your docs, notes, videos, and other data sources.

What’s the big deal? It’s a fair question and something I asked myself. After all, it’s not like we are starved for choice when it comes to chatbots. The big difference here is that it runs locally on your machine, unlike, say, ChatGPT, which runs in the cloud and draws on a general training dataset and the internet.

Chat with RTX instead relies on your chosen data to build an LLM customized to your needs. It’s particularly adept at quickly scanning PDFs and summarizing documents, and you can even paste a YouTube URL to search for and pinpoint specific moments in a video.

As mentioned, it is only a demo, and that comes with drawbacks. According to The Verge, source attribution is not always accurate, and it doesn’t have conversational memory, in contrast to ChatGPT, for which OpenAI is testing the ability to turn memory on or off.

All in all, though, it’s an interesting experiment in what localized AI could look like in the near future, especially given potential privacy concerns and how machine-local AI could address them.

This article originally appeared in the Product Hunt Daily Digest.