Yesterday, OpenAI announced that GPT-4, its most advanced large language model (LLM) yet, is now available to paid ChatGPT+ subscribers and to developers through the OpenAI API, which currently has a waitlist.

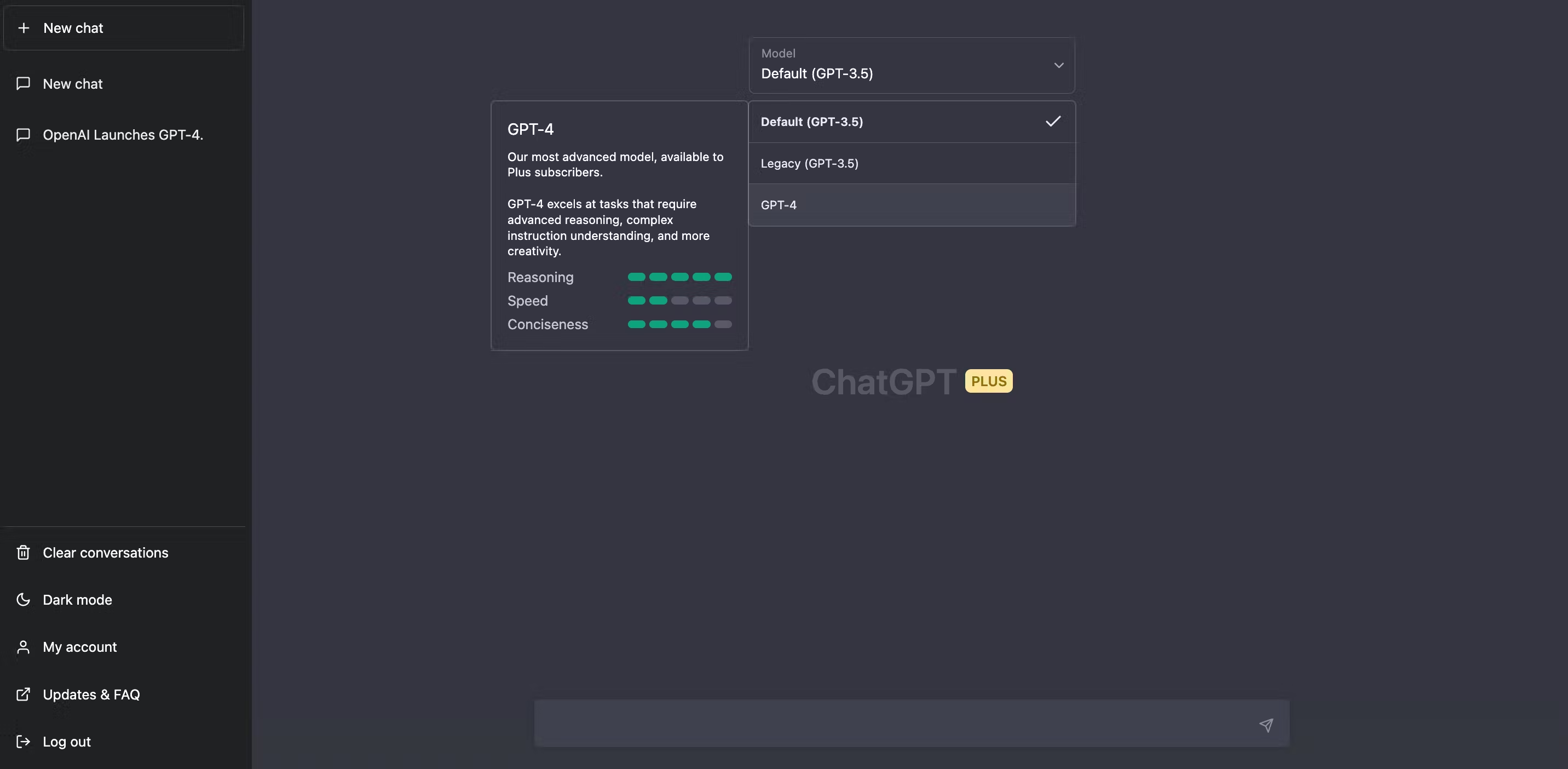

Here's what GPT-4 currently looks like in ChatGPT+:

In the hours after its launch, early users tweeted in amazement as they used GPT-4 to recreate the game of Pong in under 60 seconds, create 1-click lawsuits, and turn hand-drawn sketches into websites.

GPT-4's advanced input capabilities

GPT-4 is multimodal, meaning it can accept both text and image inputs. However, image input is not yet available in the ChatGPT+ version of GPT-4 or in the API. OpenAI says it is working with a single partner, Be My Eyes, to prepare this feature for wider availability.

OpenAI is also open-sourcing OpenAI Evals, its framework for automated evaluation of AI model performance, so that anyone can report shortcomings in its models and help guide further improvements.

GPT-4's superior outputs and user experience

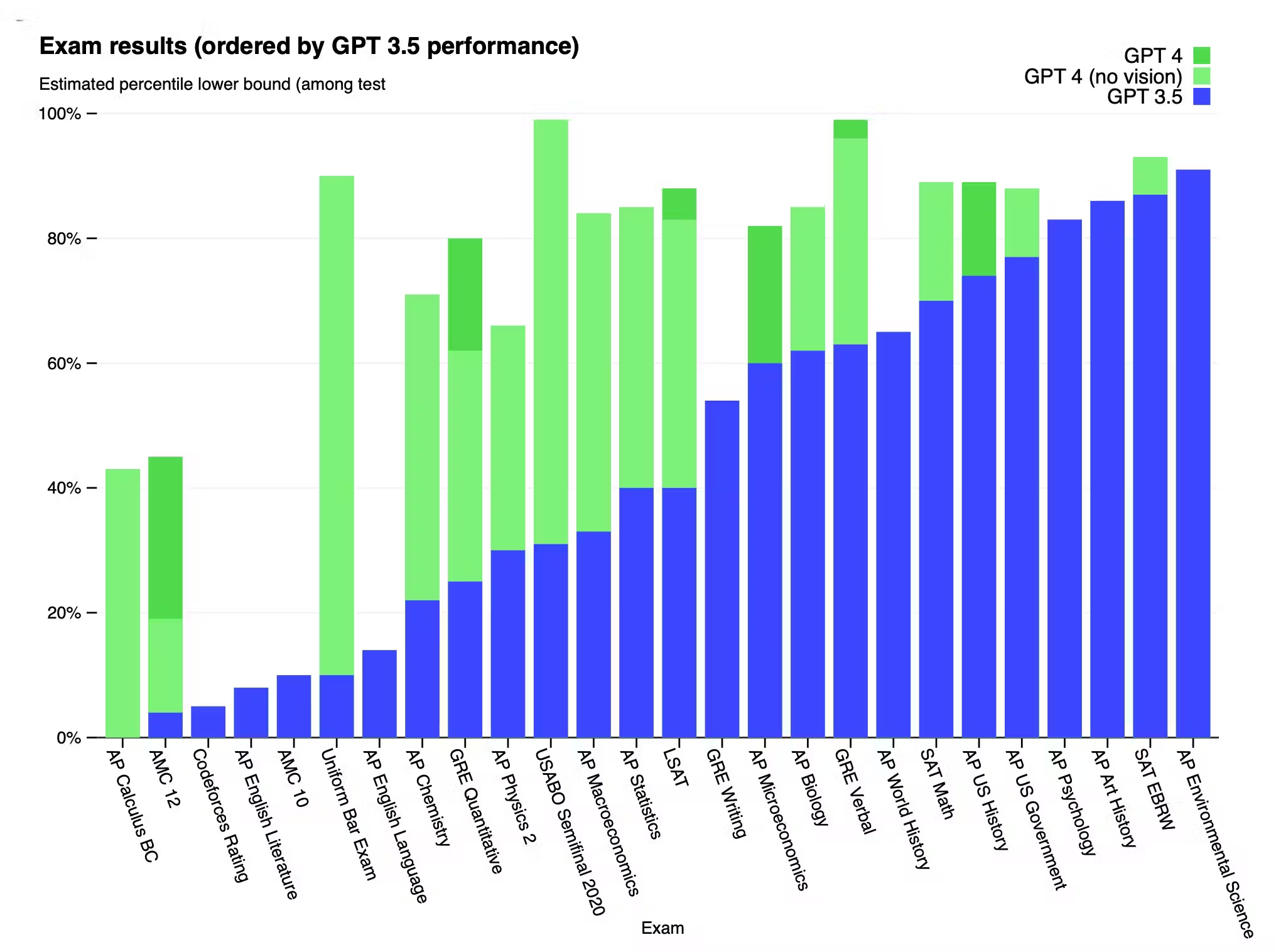

OpenAI used exams such as the bar exam and the LSAT to show that GPT-4 outperforms GPT-3.5, but stressed that it is not fully reliable and will still “hallucinate facts and make reasoning errors.”

According to OpenAI, the difference becomes apparent once a task's complexity reaches a sufficient threshold: GPT-4 is more reliable, more creative, and able to handle much more nuanced instructions than GPT-3.5.

OpenAI, Microsoft, and monetization

Microsoft revealed that Bing AI has been running on a tuned version of GPT-4 all along. By licensing GPT-4 for integration into Microsoft products, collaborating on joint research projects, and leveraging Microsoft's cloud infrastructure, OpenAI is well positioned to generate revenue while expanding its reach.

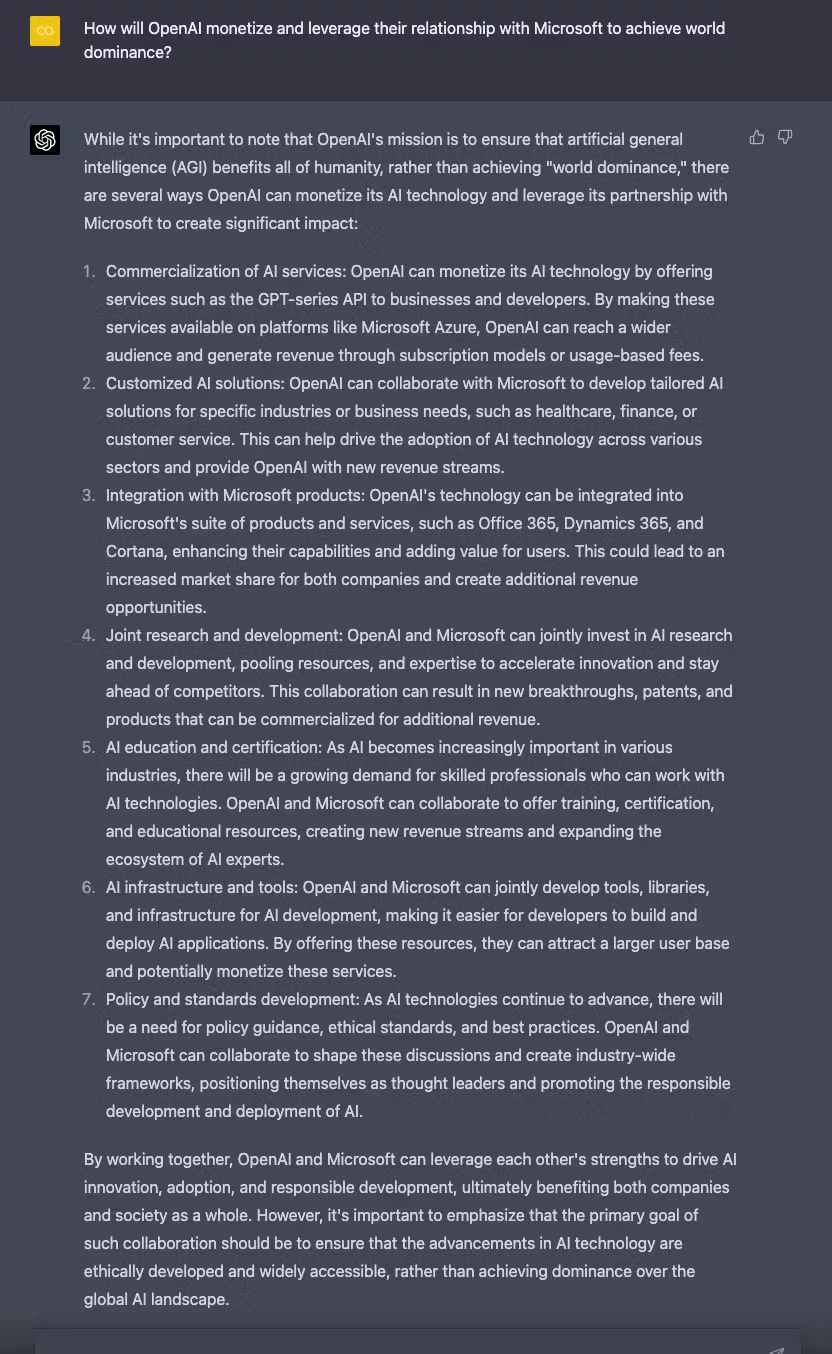

ChatGPT-4 also has some interesting ideas for how exactly OpenAI might continue to leverage their relationship with Microsoft to grow the business further:

Risks and concerns associated with GPT-4

The Atlantic recently published a piece titled “Why Are We Letting the AI Crisis Just Happen?” If you ask ChatGPT-4 to play devil’s advocate and outline the risks of this type of technology improving this much, this fast, its answer is comprehensive:

"The rapid development of powerful AI technologies like GPT-4 brings several significant risks that must be carefully considered and managed:

1. Misuse and malicious applications: Advanced language models can be weaponized to create deepfakes, disinformation, spam, or manipulative content, exacerbating the spread of fake news and misinformation. They may also be utilized for social engineering attacks or cyber threats, potentially causing significant harm to individuals and organizations.

2. Bias and discrimination: AI models learn from large datasets, which often contain historical biases and prejudices. If not properly mitigated, these biases can be perpetuated and amplified by the AI, leading to unfair and discriminatory outcomes in applications such as hiring, lending, or advertising.

3. Job displacement and labor market disruption: AI-powered automation can lead to significant job displacement in industries that rely heavily on repetitive or routine tasks. This could exacerbate income inequality and create social unrest if not accompanied by adequate support for workforce retraining and upskilling.

4. Privacy and surveillance: AI technologies can be employed for mass surveillance or intrusive data collection practices, potentially eroding privacy and civil liberties. This is especially concerning if the technology is used by governments or other entities without adequate oversight or public accountability.

5. Centralization of power and digital divide: The development and control of advanced AI technologies are often concentrated among a few large tech companies or governments. This can lead to a centralization of power and exacerbate existing digital divides, limiting access to the benefits of AI for marginalized communities or smaller organizations.

6. Ethical and moral concerns: As AI systems become more advanced and integrated into our lives, they may be tasked with making complex decisions involving human values and ethics. There is a risk that these decisions may not align with societal norms or values, leading to morally questionable outcomes.

7. Autonomous systems and accountability: The increasing autonomy of AI systems raises concerns about accountability and responsibility, particularly when AI-driven actions result in harm or unintended consequences. Determining who is liable for these outcomes becomes increasingly challenging as AI systems become more complex and autonomous."

To address these risks, it is crucial for AI developers, businesses, and governments to collaborate on responsible AI development practices, robust regulatory frameworks, and transparent monitoring systems that ensure the safe and ethical use of AI technologies.

Review GPT-4

Have you tried GPT-4? Let the community know if you think it’s worth the 20 bucks a month for instant access to try out the new model’s text-only input capabilities.