Qwen2.5-Max

Large language model series developed by Alibaba Cloud

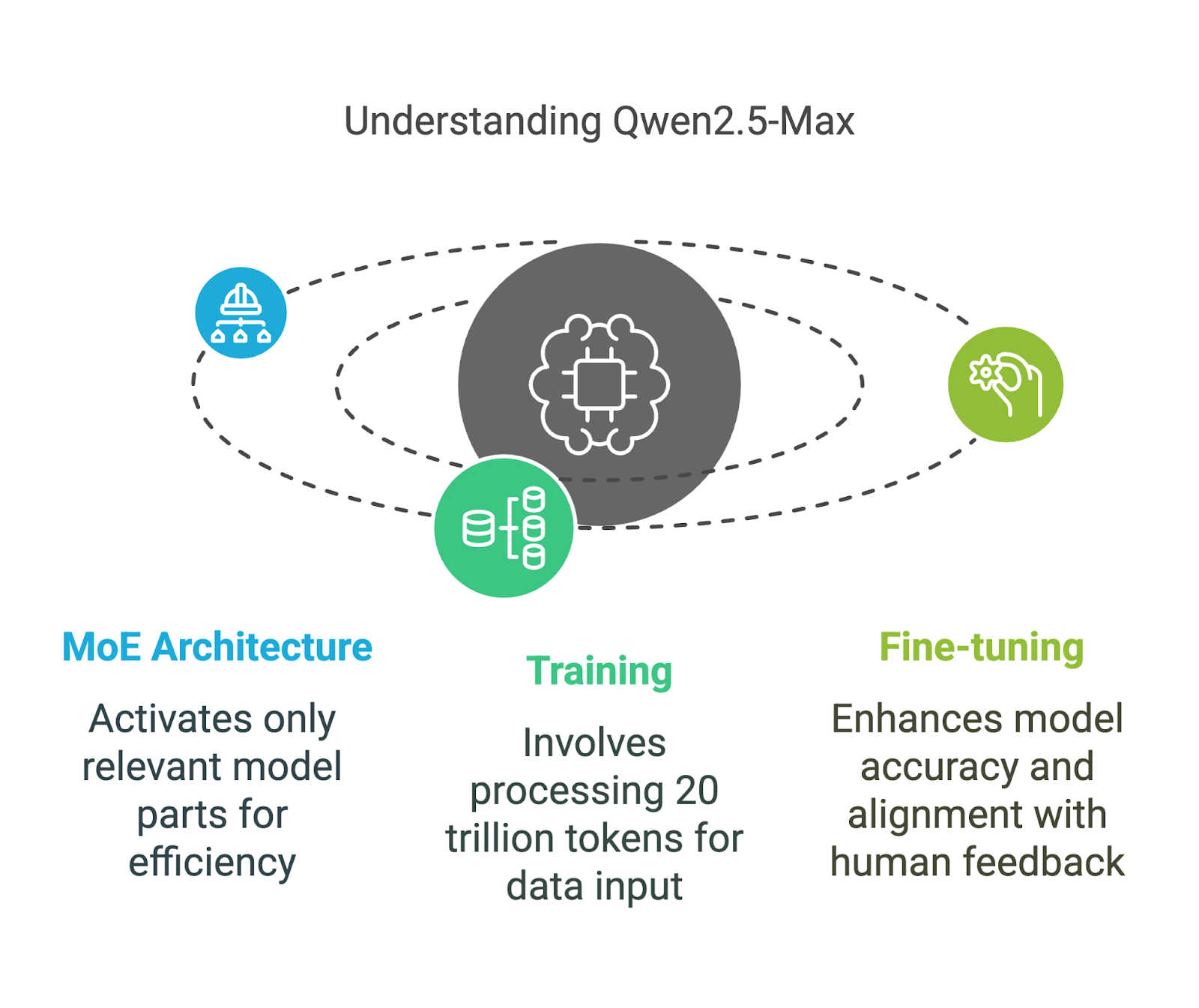

Qwen2.5-Max is a large-scale AI model built on a mixture-of-experts (MoE) architecture. After extensive pre-training and fine-tuning, it performs strongly on benchmarks such as Arena-Hard, LiveBench, and GPQA-Diamond, and is competitive with models such as DeepSeek V3.