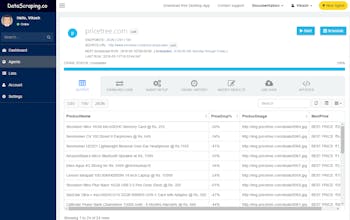

Data Scraping Studio (v 1.7)

Crawl password-protected websites using CSS selectors

This is the 2nd launch from Data Scraping Studio (v 1.7).

Online Web Scraper

Launch Team

idm.in

Retime
