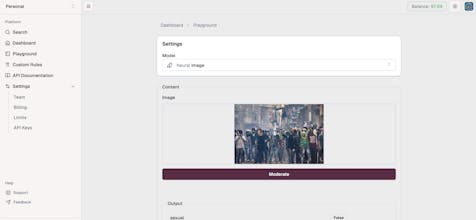

SeyftAI is a real-time, multi-modal content moderation platform that filters harmful and irrelevant content across text, images, and videos, ensuring compliance and offering personalized solutions for diverse languages and cultural contexts.

SeyftAI

Wallafan