Open AI o3

Open AI next-gen reasoning model

215 followers

Open AI next-gen reasoning model

215 followers

Today OpenAI announced o3, its next-gen reasoning model. We've worked with OpenAI to test it on ARC-AGI, and we believe it represents a significant breakthrough in getting AI to adapt to novel tasks.

This is the 2nd launch from Open AI o3. View more

OpenAI o3 and o4-mini

OpenAI o3 and o4-mini are reasoning models that think with images & agentically use tools (Search, Code, DALL-E). SOTA multimodal performance. Available in ChatGPT & API.

Free Options

Launch Team

Hi everyone!

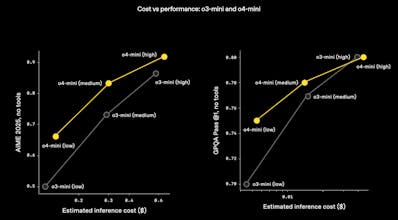

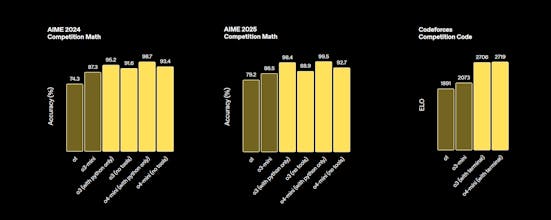

OpenAI brings their next-generation reasoning models, o3 and o4-mini, and they come with two major advancements.

First, they introduce "Thinking with Images". These models don't just see images; they can apparently manipulate them (crop, zoom, rotate via internal tools) as part of their reasoning process to solve complex visual problems, even with imperfect inputs like diagrams or handwriting. This is pushing them to SOTA on multimodal benchmarks.

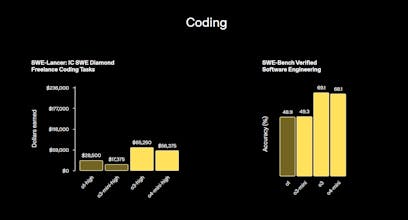

Second, building on that, they have full agentic tool access. They intelligently decide when and how to use all available tools: Search, Code Interpreter, DALL-E, and these new image manipulation capabilities. Often chaining them together to tackle multi-faceted tasks.

Quick model difference: (because the naming puzzle, right?:))

o3: The most powerful, particularly excels at complex visual reasoning.

o4-mini: Optimized for speed and efficiency, still very capable across tasks.

Both models have better instruction following and more natural conversation using memory. They're rolling out now in ChatGPT and available via the API. (OpenAI also released the open-source Codex CLI alongside).

Loving the visual reasoning features! 👍

The capabilities of o3 and o4-mini are seriously impressive—multimodal reasoning combined with tool usage takes things to a whole new level. Exciting to see how these models expand what's possible with real-time search, code execution, and visual understanding. Huge leap forward!