Ollama v0.7 introduces a new engine for first-class multimodal AI, starting with vision models like Llama 4 & Gemma 3. It offers improved reliability, accuracy, and memory management for running LLMs locally.

This is the 2nd launch from Ollama v0.7.

Ollama Desktop App

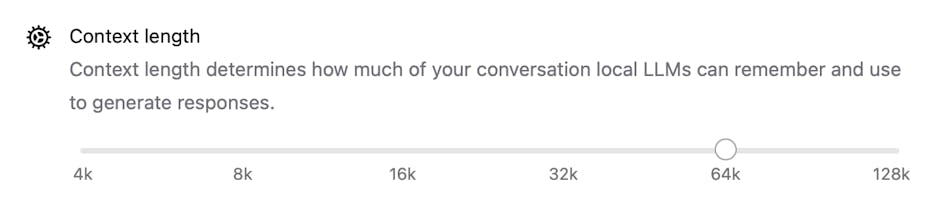

Ollama's new official desktop app for macOS and Windows makes it easy to run open-source models locally. Chat with LLMs, use multimodal models with images, or reason about files, all from a simple, private interface.

Free

Launch Team

Hi everyone!

When Ollama walks out of your command line and starts interacting with you as a native desktop app, don't be surprised :)

This new app dramatically lowers the barrier to running top open-source models locally. You can now chat with LLMs, or drag and drop files and images to interact with multimodal models, all from a simple desktop interface. Most importantly, it's Ollama, one of the most trusted and well-liked tools among users who care about privacy and data security.

Bringing the Ollama experience to people who aren't as comfortable with the command line will undoubtedly accelerate the adoption of on-device AI.

Visla

@zaczuo Love it!!!! I've been an Ollama user for a while now and have told many others about it, but they never felt as comfortable with it, so I just finished sending this out to everyone I know! 💪

Needs MCP and agentic features. Maybe soon? 🙏

Orango

@marco_visin Agentic use is perfect for local models, since you don't need speed. You can queue up some tasks overnight in different branches and let it cook.

CNVS

That's a significant update! Thank you for your product, really like it 🙌

Will the UI be open source as well, so we can adjust/modify the way it works?