Langfuse

Open Source LLM Engineering Platform

5.0•52 reviews•2.1K followers

Langfuse is an open-source LLM engineering platform that helps teams collaboratively debug, analyze, and iterate on their LLM applications. All platform features are natively integrated to accelerate the development workflow.

Langfuse is open: it works with any model and any framework, supports complex nesting of traces, and offers open APIs for building downstream use cases.

Docs: https://langfuse.com/docs

GitHub: https://github.com/langfuse/langfuse

This is the 4th launch from Langfuse.

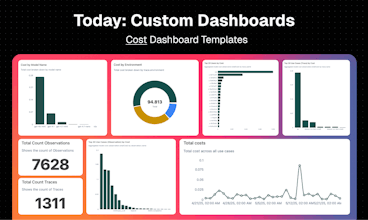

Langfuse Custom Dashboards

Free Options

Having all debugging and analysis tools integrated in one platform saves so much time and hassle.

Langfuse

@supa_l Agreed, that was the core motivation for building Langfuse in the first place when we were building with LLMs in 2023. Building great LLM applications requires constant evaluation and iteration based on application traces, user feedback, LLM-as-a-judge, etc.

Langfuse

@supa_l let me know if you have any feedback or questions!

Langfuse

Hey Product Hunt 👋

I’m Clemens, co-founder of Langfuse. We are so excited to be live on Product Hunt again today, launching one of the most requested features yet: Custom Dashboards!

Building useful LLM applications and agents requires constant iteration and monitoring. Langfuse supports this with the tracing, evaluation, and prompt engineering tools we’ve launched over the past two years (see our previous launches).

Custom Dashboards turn raw LLM traces into actionable insights in seconds. Spin up and save custom views that show the numbers you care about and keep every team on top of what matters most. This includes quality, cost, and latency metrics.

We work with thousands of teams building leading LLM applications. Based on this experience, we are launching a set of Curated Dashboards to help you get started:

Cost Management: Average cost per user, total cost per model provider

Latency Monitoring: P95 latency per model vendor, slowest step in agent applications

Evaluation: User feedback tracking, LLM-as-a-judge values (correctness, hallucinations, etc.)

Prompt Metrics: Identify high-performing prompts and prompt changes that caused issues
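To make the cost and latency dashboards more concrete, here is a minimal Python sketch of how average cost per user, total cost per model provider, and P95 latency per provider can be derived from raw observations. The `Observation` record and its field names are hypothetical and do not reflect Langfuse's data model, and P95 here uses the nearest-rank definition; Langfuse's own dashboards may aggregate or interpolate percentiles differently.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Observation:
    # Hypothetical flattened record; field names are illustrative only,
    # not Langfuse's actual schema.
    user_id: str
    provider: str
    latency_s: float
    cost_usd: float


def p95(values):
    """Nearest-rank 95th percentile of a non-empty list."""
    ordered = sorted(values)
    # Integer ceiling of 0.95 * n, avoiding floating-point rounding.
    rank = -(-95 * len(ordered) // 100)  # 1-based rank
    return ordered[rank - 1]


def dashboard_metrics(observations):
    """Return (avg cost per user, total cost per provider, P95 latency per provider).

    Assumes at least one observation.
    """
    cost_by_user = defaultdict(float)
    cost_by_provider = defaultdict(float)
    latencies_by_provider = defaultdict(list)
    for obs in observations:
        cost_by_user[obs.user_id] += obs.cost_usd
        cost_by_provider[obs.provider] += obs.cost_usd
        latencies_by_provider[obs.provider].append(obs.latency_s)
    avg_cost_per_user = sum(cost_by_user.values()) / len(cost_by_user)
    p95_latency = {p: p95(v) for p, v in latencies_by_provider.items()}
    return avg_cost_per_user, dict(cost_by_provider), p95_latency


# Made-up sample data for illustration.
sample = [
    Observation("u1", "openai", 1.0, 0.5),
    Observation("u1", "openai", 2.0, 0.25),
    Observation("u2", "anthropic", 3.0, 1.0),
]
avg_cost, totals, p95_by_provider = dashboard_metrics(sample)
print(avg_cost, totals, p95_by_provider)
```

Nearest-rank was chosen because it always returns an observed value, which keeps the sketch simple; with very few data points, as in the sample, the P95 is simply the slowest call.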

And because insights shouldn’t stay locked in a UI, we’re introducing a Query API endpoint. All traces visible in Langfuse can now be fetched and aggregated via this endpoint and piped into any downstream application. This enables you to:

Build embedded analytics directly into your application

Consume metrics in your analytics stack

Power features like rate limiting or billing for your own users
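As a rough illustration of piping Query API results into a downstream service, here is a minimal Python sketch that only builds the HTTP request. It assumes the endpoint lives at `/api/public/metrics` on your Langfuse host and accepts the query as a JSON-encoded `query` URL parameter; the view, metric, and dimension names in the example query are also assumptions, so check them against the Langfuse docs before relying on them. Authentication uses HTTP Basic auth with the project's public key as username and secret key as password; `pk-lf-...` and `sk-lf-...` are placeholders.

```python
import base64
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_metrics_request(host, public_key, secret_key, query):
    """Build (but do not send) a GET request for the assumed Query API endpoint."""
    # Assumption: path /api/public/metrics with a JSON-encoded `query` parameter.
    url = f"{host.rstrip('/')}/api/public/metrics?" + urlencode(
        {"query": json.dumps(query)}
    )
    # HTTP Basic auth: public key as username, secret key as password.
    token = base64.b64encode(f"{public_key}:{secret_key}".encode()).decode()
    return Request(url, headers={"Authorization": f"Basic {token}"}, method="GET")


# Hypothetical query: total cost per model over one month.
# Field names below are assumptions, not a confirmed schema.
cost_per_model = {
    "view": "observations",
    "metrics": [{"measure": "totalCost", "aggregation": "sum"}],
    "dimensions": [{"field": "providedModelName"}],
    "filters": [],
    "fromTimestamp": "2025-01-01T00:00:00Z",
    "toTimestamp": "2025-02-01T00:00:00Z",
}

req = build_metrics_request(
    "https://cloud.langfuse.com", "pk-lf-...", "sk-lf-...", cost_per_model
)
print(req.get_method(), req.full_url)
```

Sending the request with `urllib.request.urlopen(req)` and parsing the JSON body would yield aggregated rows that a billing or rate-limiting job could consume; keeping request construction separate makes that step easy to test without network access.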

Try it:

⭐ GitHub: https://github.com/langfuse/langfuse

⏯️ Interactive Demo: https://langfuse.com/demo

📒 Docs: https://langfuse.com/docs

💬 Discord: https://langfuse.com/discord

A few other things you get with Langfuse:

👣 Tracing: SDKs (TS, Python) + OTel + integrations for OpenAI, LangChain, LiteLLM & more

✏️ Prompt Management: version, collaborate, and deploy prompts

⚖️ Evaluation: dataset experiments, LLM-as-judge evals, prompt experiments, annotation queues

🕹️ Playground: iterate on prompts, simulate tool use and structured outputs

🧑‍🤝‍🧑 Community: thousands of builders in GitHub Discussions & Discord

Thanks again, PH community—your feedback shaped this release. We’ll be here all day; show us the dashboards you find most insightful and let’s keep building!

Super

So excited for this launch, folks! The Langfuse team is truly one of the most impressive I've followed in ages: constantly listening and building the right tools for AI builders. This is just another milestone on this journey 🔥

Langfuse

@christophepas Thanks a lot, Christophe! Just saw that you are launching super.work, congrats!

Let me know if you have any feedback or questions regarding Langfuse.