Hallucina-Gen

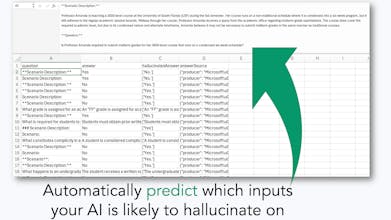

Spot where your LLM might make mistakes on documents


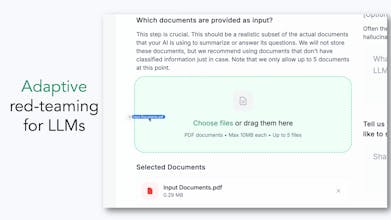

Using LLMs to summarize or answer questions from documents? We automatically analyze your PDFs and prompts and generate test inputs that are likely to trigger hallucinations. Built for AI developers who want to validate outputs, test prompts, and squash hallucinations early.

FairPact AI

It might be useful. Good work! 👍

FairPact AI

Hey there, awesome makers! 👋

We’re super excited to share our new tool that helps catch those tricky AI mistakes in your document-based projects. Give it a try and let us know what you think!