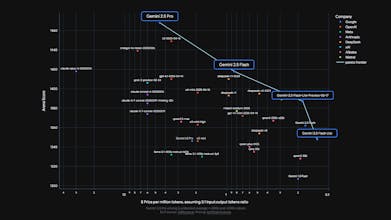

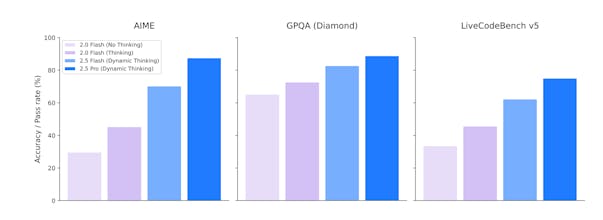

Gemini 2.5 Flash is now in preview, offering improved reasoning while prioritizing speed and cost efficiency for developers.

This is the 2nd launch from Gemini 2.5 Flash.

Gemini 2.5 Flash-Lite

Gemini 2.5 Flash-Lite is Google's new model, the fastest and most cost-efficient in the 2.5 family. It offers higher quality and lower latency than previous Lite versions while still supporting a 1M token context window and tool use. It is now in preview.

Impressive to see Google optimizing not just for intelligence but also for speed and cost. Does Gemini 2.5 Flash-Lite offer any fine-tuning or custom instruction capabilities for enterprise-level workflows?

All the best for the launch @sundar_pichai & team!