Flapico

Prompt versioning, testing, and evaluation

123 followers

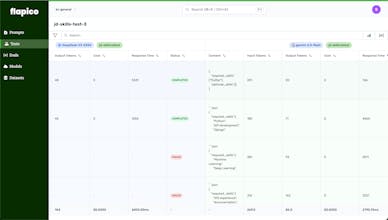

Flapico lets you version, test & evaluate your prompts, and makes your LLM apps reliable in production.

🔓 Decouple your prompts from your codebase
📊 Quantitative tests, instead of guesswork
💻 Have your team collaborate on writing & testing prompts

Flapico

Hi everyone,

I am excited to launch Flapico. Over the last couple of years, I've been working with different companies to build & ship LLM apps. I saw some common problems everywhere, irrespective of the size of the company.

- People are unsure where to keep prompts (some track them in git, which gets messy).

- Functional teammates share prompts over Teams & Slack so that developers can integrate them.

- The choice of LLM (and prompt) is often based on gut feeling or guesswork instead of numbers.

Flapico addresses these issues and more. As a developer, I know I never want to change my development paradigm. That's why Flapico doesn't aim to replace your existing tools or methods. Instead, it exists to solve just the right problems.

It gives me joy to see how functional team members (like domain experts) are collaborating with developers on Flapico.

I'd love to hear your thoughts and feedback.

Feel free to reach out at adarsh[at]flapico[dot]com

Kalyxa

@adarsh_punj

Totally get this — I’ve seen the same mess with prompts floating around Slack and no real system to track what works. Love that you’re not trying to reinvent the dev workflow but just solve the annoying parts. Curious to see how teams use it in the wild.

Flapico offers a clever way to take control of your LLM prompts—versioning, testing, and evaluating them for reliability in production. I love how it replaces guesswork with real data and supports team collaboration, making prompt management way more professional and efficient.

Flapico

@supa_l Thanks!

Flapico is a must-have tool for anyone working with LLM apps! It allows you to version, test, and evaluate prompts, ensuring your applications are reliable in production. With features like decoupling prompts from the codebase and quantitative testing, it makes collaborating on prompt creation and testing a seamless experience. I’m excited to see how it improves efficiency and accuracy in AI development!