Deepgram

Voice AI platform for developers.

4.8 • 69 reviews • 1.7K followers

Launched on February 27th, 2025

Deepgram is highly praised for its fast, accurate, and reliable speech-to-text capabilities. It is particularly valued for its real-time transcription and low latency, making it a preferred choice for applications like Shortcut and Daily.co. Users appreciate its ease of integration and the generous free credits offered. The platform's ability to handle multiple languages and provide detailed transcripts is also highlighted, as seen in Vapi. Overall, Deepgram is recognized for its excellent performance and developer-friendly features.

In your video you say that the AI can take in vague instructions and turn them into precise instructions. Models like o1 and o3 do that sort of fine, but the question holds: can it be done in a truly useful way? I would really appreciate some use cases and other examples to see how it works in your cool app.

Deepgram

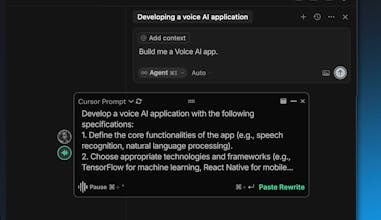

@chan_bartz Great question! Yes, models like o1 and o3 can kind of handle vague input, but they’re inconsistent without the right prompt structure. What Saga does is convert your fuzzy, natural speech into a clean, structured instruction that actually works when passed into tools like Cursor, Replit, or Windsurf. It acts like a pre-processor that speaks “LLM,” so you don’t have to.

Example 1:

You say: “Make a helper to format a date string”

Saga rewrites it into:

It then pipes that into Cursor and gets back usable code on the first try.

Example 2:

You say: “Add error handling to this function”

Saga rewrites it into something like:

We’re seeing devs use it to avoid prompt tinkering and get more consistent results from AI coding tools.

Would love to see how it works for you when you try it out!

Tried it out and this is awesome 🤩