Arch

Build fast, specialized agents with intelligent infra

304 followers

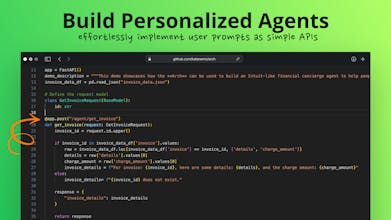

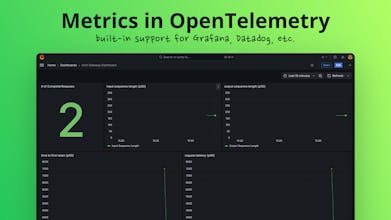

Arch is an intelligent gateway for agents: an AI-native, open source infrastructure project that helps developers build fast, hyper-personalized agents in minutes. Arch is engineered with specialized (fast) LLMs to transparently integrate prompts with APIs (function calling) and to add safety, routing, and observability features in seconds, so that developers can focus on what matters most.
